Fast analysis, better insight and rapid deployment with minimal IT involvement: these are among the benefits of in-memory analytics, but different products are appropriate for different environments. Read our in-depth report on in-memory technologies.

There was a time when the select few business intelligence users within your organization were happy to get a weekly report. Today, smart companies are striving to spread fact-based decision-making throughout the organization, but they know they can't do it with expensive, hard-to-use tools that require extensive IT hand-holding. The pace of business now demands fast access to information and easy analysis; if the tools aren't fast and easy, business intelligence will continue to have only modest impact, primarily with experts who have no alternative but to wait for an answer to a slow query.

In-memory analytics promises to deliver decision insight with the agility that businesses demand. It's a win for business users, who gain self-service analysis capabilities, and for IT departments, which can spend far less time on query analysis, cube building, aggregate table design, and other time-consuming performance-tuning tasks. Some even claim that in-memory technology eliminates the need for a data warehouse and all the cost and complexity that entails.

There's no doubt that in-memory technology will play a big part in the future of BI. Indeed, vendors ranging from Microsoft and MicroStrategy to Oracle and IBM are joining the in-memory bandwagon. Yet no two products deliver in-memory capabilities in the same way for the same business needs.

This article, which is an executive summary of an Intelligent Enterprise report entitled "Insight at the Speed of Thought: Taking Advantage of In-Memory Analytics," helps you understand the value of in-memory technologies. To download the free report, which includes quick takes on ten leading products, six "Reality Checks" on product suitability and selection, and four points of strategic planning advice, register and download here.

In-Memory Advantages

Business users have long complained about slow query response. If managers have to wait hours or even just a few minutes to gain insights to inform decisions, they're not likely to adopt a BI tool, nor will front-line workers who may only have time for gut-feel decision-making. Instead they'll leave the querying to the few BI power users, who will struggle to keep up with demand while scarcely tapping the potential for insight. In many cases, users never ask the real business questions and instead learn to navigate slow BI environments by reformulating their crucial questions into smaller queries with barely acceptable performance.

Such was the case at Newell Rubbermaid, where many queries took as long as 30 minutes. An SAP ERP and Business Warehouse user, the company recently implemented SAP's Business Warehouse Accelerator (BWA), an appliance-based in-memory analysis application. With BWA in place, query execution times have dropped to seconds.

"Users are more encouraged to run queries that sum up company-level data, which may entail tens of millions of rows, yet they're not worried about killing [performance]," says Yatkwai Kee, the company's BW administrator. Business users can now quickly and easily analyze data across divisions and regions with queries that previously would have been too slow to execute.

Beyond the corporate world, in-memory BI tools allow state agencies and cities to stretch tax dollars further while improving services. For example, the Austin, Texas, fire department serves over 740,000 residents and responds to more than 200 calls a day. The department recently deployed QlikTech's QlikView to better analyze call response times, staffing levels and financial data. QlikTech is an in-memory analytic application vendor that has been growing rapidly in the last few years. With QlikView, users can get to data in new ways and perform what-if analysis, which the department says has helped in contract negotiations.

And benefits go well beyond the fire department. "Unless we spend more efficiently, costs for safety services will take a larger share of tax dollars, making less budget available for services such as libraries and parks," says Elizabeth Gray, a systems supervisor. Gray says that attendance and payroll data come from different systems and never seemed to make the priority list in the central data warehouse. "With QlikView, we can access multiple data sources, from multiple platforms and different formats," she says. "We can control transformations and business logic in the QlikView script and easily create a presentation layer that users love."

In many cases, in-memory products such as QlikView and IBM Cognos TM1 have been deployed at the departmental level, because central IT has been too slow to respond to specific business requirements. A centralized data warehouse that has to accommodate an enterprise's diverse requirements can have a longer time-to-value. Demand for in-memory is also strong among smaller companies that lack the resources or expertise to build a data warehouse; these products offer an ideal alternative because they can analyze vast quantities of data in memory and are a simpler, faster alternative to relational data marts.

A number of the tools that use in-memory approaches facilitate a more exploratory, visual analysis. Vendors TIBCO Spotfire, Tableau Software and Advizor Solutions, for example, take an in-memory approach that offers a stark contrast to many query and OLAP products; instead of starting with a blank screen to build a query or a report, users start with a view of all the data. Held in memory, this data is then filtered down to just the information users are looking for with easy-to-use data selection, sliders, radio boxes and check boxes.
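The "start with everything, then filter" interaction described above can be sketched in a few lines of Python. This is only an illustration, not how Spotfire, Tableau or Advizor are implemented; the `records` data and the `apply_selections` helper are hypothetical.

```python
# Hypothetical records that are already held in memory.
records = [
    {"region": "East", "product": "Widgets", "revenue": 120.0},
    {"region": "East", "product": "Gadgets", "revenue": 80.0},
    {"region": "West", "product": "Widgets", "revenue": 200.0},
    {"region": "West", "product": "Gadgets", "revenue": 50.0},
]


def apply_selections(records, checked=None, revenue_range=None):
    """Narrow the full data set the way check boxes and a slider would.

    checked maps a field name to the set of values that are ticked;
    revenue_range is an inclusive (low, high) pair, like a slider.
    """
    checked = checked or {}
    low, high = revenue_range if revenue_range else (float("-inf"), float("inf"))
    return [
        rec
        for rec in records
        if all(rec[field] in allowed for field, allowed in checked.items())
        and low <= rec["revenue"] <= high
    ]


# With no selections the user sees all the data.
everything = apply_selections(records)

# Ticking "East" and dragging the slider to 100-500 narrows the view.
east_big = apply_selections(
    records, checked={"region": {"East"}}, revenue_range=(100.0, 500.0)
)
```

Because the full data set stays in memory, each change to a selection is just another pass over `records` rather than a new query to a database.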

How It Works

As the name suggests, the key difference between conventional BI tools and in-memory products is that the former query data on disk while the latter query data in random access memory (RAM). When a user runs a query against a typical data warehouse, for example, the query normally goes to a database that reads the information from multiple tables stored on a server's hard disk (see "Query Approaches Compared," below).


Query Approaches Compared

With in-memory tools, all information is first loaded into memory. If the in-memory tool is server-based, an administrator may initiate the load process; if it's a desktop analysis tool, the user may initiate the process on his or her workstation. Users then query and interact with data loaded into the machine's memory. Accessing data in memory can be orders of magnitude faster than accessing data on disk: a random read from RAM takes on the order of a hundred nanoseconds, while a disk seek takes several milliseconds. This is the real, "speed-of-thought" advantage that lives up to all the hyperbole.
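As a rough sketch of the load-once, query-many pattern described above, the Python below reads a small extract into memory a single time and then answers two different questions from the in-memory rows. The CSV text, column names and helper functions are assumptions made for illustration; real products use their own loaders and storage formats.

```python
import csv
import io
from collections import defaultdict

# Hypothetical extract standing in for a source table read from disk.
SOURCE_CSV = """region,product,revenue
East,Widgets,120.0
East,Gadgets,80.0
West,Widgets,200.0
West,Gadgets,50.0
"""


def load_into_memory(source_text):
    """Read every row once and keep the whole data set in RAM."""
    reader = csv.DictReader(io.StringIO(source_text))
    return [
        {
            "region": row["region"],
            "product": row["product"],
            "revenue": float(row["revenue"]),
        }
        for row in reader
    ]


def total_by(rows, dimension):
    """Answer an ad hoc query by scanning the in-memory rows."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[dimension]] += row["revenue"]
    return dict(totals)


rows = load_into_memory(SOURCE_CSV)    # the load step, done once
by_region = total_by(rows, "region")   # later queries never touch the source
by_product = total_by(rows, "product")
```

The point of the sketch is the division of labor: the expensive read happens in `load_into_memory`, and every subsequent question is a scan over data that is already in RAM.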

In-memory BI may sound like caching, a common approach to speeding query performance, but in-memory products don't suffer from the same limitations. Those caches are typically subsets of data, stored on and retrieved from disk (though some may load into RAM). The key difference is that the cached data is usually predefined and very specific, often to an individual query; but with in-memory tools, the data available for analysis is potentially as vast as an entire data mart.

Another approach to solving query performance problems is for database administrators (DBAs) to analyze a query and then create indexes or aggregate tables in the relational database. When a query hits an aggregate table, which contains only a subset of the data, it may scan only a million records rather than the hundreds of millions of rows in a detailed fact table.
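To make the aggregate-table idea concrete, here is a minimal Python sketch, with hypothetical data and function names, that pre-summarizes detail rows and then answers the same question from either the detail or the summary. In a real warehouse the aggregate would be a table built and maintained by a DBA.

```python
from collections import defaultdict

# Hypothetical detail-level fact rows: (region, month, revenue).
fact_rows = [
    ("East", "2009-01", 100.0),
    ("East", "2009-01", 50.0),
    ("East", "2009-02", 70.0),
    ("West", "2009-01", 30.0),
    ("West", "2009-02", 90.0),
    ("West", "2009-02", 10.0),
]


def build_aggregate(rows):
    """Pre-summarize detail rows to one entry per (region, month)."""
    agg = defaultdict(float)
    for region, month, revenue in rows:
        agg[(region, month)] += revenue
    return dict(agg)


def region_total_from_detail(rows, region):
    """Scan every detail row; the cost grows with the fact table."""
    return sum(rev for reg, _, rev in rows if reg == region)


def region_total_from_aggregate(agg, region):
    """Scan only the smaller, pre-built summary."""
    return sum(rev for (reg, _), rev in agg.items() if reg == region)


aggregate = build_aggregate(fact_rows)
```

Both functions return the same total, but the aggregate has four entries to scan instead of six detail rows. The gap widens as the fact table grows, and so does the work of deciding which aggregates to build and keeping them current.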

Yet one more route to faster performance is to create a MOLAP (Multidimensional Online Analytical Processing) database. But whether it's tuning or MOLAP, these paths to user-tolerable performance are laborious, time consuming and expensive. DBAs are often versed in performance tuning for transaction systems, but the ability to tune analytic queries is a rarer skill set often described as more art than science. What's more, indexes and aggregate tables consume costly disk space. In-memory tools, in contrast, use techniques to store the data in highly compressed formats. Many vendors and practitioners cite a 1-to-10 data-volume ratio when comparing in-memory systems to traditional, on-disk storage.
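The article does not say which compression techniques the vendors use. One technique commonly associated with in-memory, column-oriented stores is dictionary encoding, sketched below in Python as an assumption-laden illustration: each distinct value is stored once and the column itself becomes a list of small integer codes. The function names and sample column are hypothetical.

```python
def dictionary_encode(values):
    """Replace repeated values with integer codes into a dictionary."""
    dictionary = []   # distinct values, in order of first appearance
    index = {}        # value -> its code
    codes = []        # the encoded column
    for value in values:
        if value not in index:
            index[value] = len(dictionary)
            dictionary.append(value)
        codes.append(index[value])
    return dictionary, codes


def dictionary_decode(dictionary, codes):
    """Rebuild the original column from the dictionary and the codes."""
    return [dictionary[code] for code in codes]


# Hypothetical low-cardinality column, typical of a dimension attribute.
column = ["East", "West", "East", "East", "West", "North", "East"]
dictionary, codes = dictionary_encode(column)
```

The savings depend on how often values repeat, which is one reason a ratio such as 1-to-10 should be read as a rule of thumb rather than a guarantee.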

So while users benefit from lightning-fast queries, in-memory BI is also a big win for BI system administrators. Newell Rubbermaid, for example, says its BWA deployment has eliminated significant administrative time that was formerly required to tune queries. "The queries were so fast [after the in-memory deployment], our users thought some data must have been missing," says Rajeev Kapur, director of business analytics at Newell Rubbermaid. Yet the performance improvement didn't involve analysis of access paths or creation of indexes or aggregate tables as were previously required.

Options Emerge

In-memory products and capabilities are gaining momentum. TM1 (formerly Applix, which was acquired by IBM Cognos in 2007) was one of the early innovators of in-memory analytics in the 1980s. Beyond super-fast analysis, one of the compelling features of TM1 and a few other in-memory tools is the ability to perform what-if analysis on the fly. For example, users can input budget values or price increases to forecast future sales. The results are immediately available and held in memory. In contrast, most disk-based OLAP tools would require the database to be recalculated, either overnight or at the request of an administrator.
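A what-if calculation of the kind described above might look like the following Python sketch. It is not TM1's model or API; the `model` data and `forecast_revenue` function are hypothetical, and the sketch assumes that unit volumes stay the same when prices change.

```python
# Hypothetical in-memory model: units sold and unit price per product.
model = {
    "Widgets": {"units": 1000, "price": 10.0},
    "Gadgets": {"units": 500, "price": 20.0},
}


def forecast_revenue(model, price_increase_pct=0.0):
    """Recompute revenue per product for a proposed price increase.

    Assumes demand is unchanged by the price change (an illustrative
    simplification, not a claim about any real forecasting method).
    """
    factor = 1.0 + price_increase_pct / 100.0
    return {
        name: item["units"] * item["price"] * factor
        for name, item in model.items()
    }


baseline = forecast_revenue(model)
scenario = forecast_revenue(model, price_increase_pct=5.0)
uplift = sum(scenario.values()) - sum(baseline.values())
```

Because the inputs are already in memory, trying a different percentage is one more function call, with no overnight recalculation of a disk-based cube.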

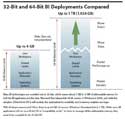

Adoption of in-memory tools was initially held back by both the high cost of RAM and limited scalability. Just a few years ago, 1 GB of RAM topped $150, whereas today it costs only about $35. So a powerful analytics server with 64 GB of RAM that used to cost $64,000 today costs only about $13,000. The increasing prevalence of 64-bit operating systems (OSes) also makes in-memory analytics more scalable. Conventional 32-bit OSes offer only 4 GB of addressable memory, whereas 64-bit OSes support up to 1 TB of memory (as a reminder, 1 TB = 1,024 GB). The impact of this difference is enormous. Once the OS, BI server and metadata of a typical BI system are loaded in a 32-bit environment (see "32-bit and 64-bit BI Deployments Compared"), there's not much memory left for the data that users want to query and analyze. In a 64-bit operating system with 1 TB of addressable memory, many companies can load multiple data marts if not their entire data warehouse into RAM.


32-Bit and 64-Bit BI Deployments Compared
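The 4 GB figure follows directly from the address width: 32 bits can distinguish 2^32 byte addresses. The short Python sketch below works through that arithmetic and the "what is left for data" comparison. The overhead figures for the OS, BI server and metadata are hypothetical placeholders, and the 1 TB limit is the figure cited in this article rather than the theoretical 64-bit maximum, which is far larger.

```python
GIB = 1024 ** 3  # bytes in one gigabyte (binary)


def addressable_gb(address_bits):
    """Gigabytes reachable with the given number of address bits."""
    return (2 ** address_bits) // GIB


def memory_left_for_data(total_gb, overhead_gb):
    """RAM remaining for user data after fixed overhead is loaded."""
    return max(total_gb - overhead_gb, 0)


# Hypothetical overhead, in GB: OS, BI server and metadata.
overhead_gb = 1.0 + 1.5 + 0.5

limit_32_bit_gb = addressable_gb(32)   # 2**32 bytes
limit_64_bit_gb = 1024                 # 1 TB, the OS limit cited in the text

left_32_bit = memory_left_for_data(limit_32_bit_gb, overhead_gb)
left_64_bit = memory_left_for_data(limit_64_bit_gb, overhead_gb)
```

Under these assumed overheads, the 32-bit system has about 1 GB left for data while the 64-bit system has more than 1,000 GB, which is the gap the comparison above is meant to convey.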

Addressable RAM is certainly a key ingredient in the success of in-memory analytics, and the spread of 64-bit OSes will accelerate adoption considerably. HP first released a 64-bit version of Unix in 1996, and Microsoft first released the 64-bit version of Windows in 2005. In the classic chicken-and-egg scenario, customers have been slow to adopt 64-bit OSes, so support from BI vendors has been mixed. Support for 64-bit OSes makes in-memory BI enterprise-scalable, but it is by no means a prerequisite to an in-memory deployment. Many customers are deploying in-memory BI tools on 32-bit OSes, but these systems are more likely to be geared to work groups and individuals working with smaller amounts of data.

There are signs that adoption of 64-bit OSes and in-memory deployments are picking up steam. In-memory BI vendor QlikTech, for example, has reported sales growth rates of more than 80% per year over the last several years, while the BI market's overall growth over that same period averaged 10%. What's more, even the largest, most well-established vendors are adding in-memory to their platforms. SAP, for example, released the Business Warehouse Accelerator in 2006. IBM Cognos acquired Applix and its TM1 product in 2007. In March of this year, MicroStrategy released MicroStrategy 9, which introduces an in-memory option as part of the platform's OLAP Services. Then there is the much-anticipated Project Gemini release from Microsoft. Due in 2010, Gemini is an enhancement to Excel and Analysis Services that will bring in-memory analytics to spreadsheet users, enabling users to slice and dice through millions of records with promised sub-second response times.

Relational database vendors have also acquired in-memory capabilities. Oracle acquired TimesTen in 2005, and IBM acquired solidDB in 2008. However, neither vendor has articulated a clear strategy on whether these technologies will be used only to speed transaction processing or also be used to speed data analysis.

Product Selection and Deployment Strategy

Now that you understand the value of in-memory technologies, download the "Insight at the Speed of Thought: Taking Advantage of In-Memory Analytics" report for the analysis of ten leading products, six "Reality Checks" on product suitability and selection, and four key points of strategic planning advice. The report can be downloaded by registering for the free download here.
