Killer robots and malicious computers may keep technical geniuses up at night, but we need more artificial intelligence to keep natural stupidity in check.


Not to quibble with Elon Musk, Stephen Hawking, or any of the other scientific and technical luminaries who have expressed concern about the potential danger of artificial intelligence (AI) over the years, but we have other things to worry about.

Here's how Hawking put it over the summer, in an interview with comedian John Oliver: "Artificial intelligence could be a real danger in the not-too-distant future. It could design improvements to itself and outsmart all of us."

In October, Elon Musk weighed in. "I think we should be very careful about artificial intelligence," he said during an interview at MIT's AeroAstro Centennial Symposium. "If I had to guess at what our biggest existential threat is, it’s probably that."

Certainly it is simple to imagine a very threatening intelligent android, armed with really sharp scissors and a database of horror movies. Designing it, programming it, financing it, manufacturing it, and sending it out into the world to wreak havoc, refuel itself, and reproduce and maintain its kind is a bit more difficult.

In 2013, researchers reported that ConceptNet 4, a prominent AI system, scored at roughly the level of a 4-year-old on a verbal IQ test. Even that is a bit of an overstatement, because a 4-year-old is resourceful enough to eat when low on energy, while ConceptNet 4 becomes a brick without power.

Now a bot with the brains of a 4-year-old could be a dangerous thing indeed, if it were armed with nuclear missiles, for example. Chances are, however, this malevolent mechanical toddler would not be capable of reprogramming itself and creating more of its kind to punish humanity for its hubris. And there's reason to believe it wouldn't be given a nuclear arsenal in the first place. Let's hope anyone who did such a thing would be tried for crimes against humanity.

But before getting into the practical barriers to dangerous AI, let's look at several more immediate existential threats, ones that represent more than theoretical danger.

In the August 1994 issue of the journal Science and Public Policy, Donald MacKenzie, chair of the sociology department at the University of Edinburgh, estimated that the total number of computer-related accidental deaths worldwide, up to 1992, was about 1,100, plus or minus 1,000.

Since then, the number has undoubtedly increased. And the computer-related death toll could be expanded to include deliberate deaths, depending on how AI is defined. A heat-seeking missile, for example, could be considered an inflexible form of AI. But if computers, through bugs, system flaws, and AI, are responsible for a few thousand deaths around the world, that's insignificant compared to the major causes of mortality.

In 2012, according to the World Health Organization, 7.4 million people died of ischemic heart disease, 6.7 million people died of stroke, and 3.1 million people died of chronic obstructive lung disease. In 2013, 1.24 million people died due to traffic accidents. To put that in perspective, consider that since the American Revolutionary War, the number of US military deaths in all our wars comes to almost 850,000. Even when we try to kill each other, we fall far short of the dangers of the world around us.

AI may pose a threat, but it is nowhere near as lethal as bad drivers. No wonder Google keeps talking about the safety benefits of self-driving cars. Elon Musk could certainly design a malevolent Tesla that runs people over, but AI in cars will probably save more lives than it ends in any given time period.

Consider just a few of the more pressing dangers we face...

Influenza: A 2010 study estimated that there were somewhere between 3,000 and 49,000 flu-related deaths per year in the US from the 1976-1977 flu season to the 2006-2007 flu season. The influenza pandemic of 1918–1919 is estimated to have caused about 50 million deaths worldwide.

Climate change: The World Health Organization predicts that an additional 250,000 people will die each year between 2030 and 2050 as a consequence of climate change.

Meteor strike: The 2013 Chelyabinsk meteor and the 1908 Tunguska event (caused by an asteroid or comet) are reminders that large impacts happen, and a big enough one can cause a mass extinction. According to UC Berkeley scientists, mass extinctions happen roughly every 62 million years. The last such catastrophic impact occurred about 65 million years ago.

Overuse of antibiotics: According to the CDC, in the United States alone, at least 2 million people are infected each year with bacteria resistant to antibiotics. At least 23,000 people die each year as a result of those infections, and more die from other conditions complicated by an antibiotic-resistant infection. That's not artificial intelligence; it's natural evolution, driven by our own unwillingness to recognize the problem.

Political, religious, social strife: The world is a dangerous place, and there's always a war over something somewhere. Conflicts cause more deaths than any AI system we're likely to see any time soon.

Lack of sanitation, clean water: About 801,000 children under age 5 die every year from diarrhea, according to WHO and UNICEF. About 88% of those deaths can be attributed to unsafe drinking water, lack of water for hygiene, and lack of access to sanitation. All told, that's about 2,200 children dying every day, and they're not being gunned down by the Terminator.

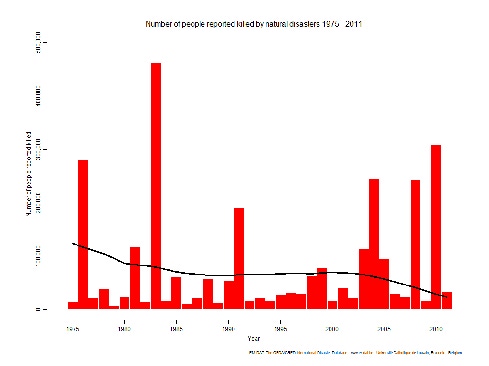

Weather: Lightning, tornadoes, floods, hurricanes, heat, cold, and rip currents all represent a greater danger than AI at the moment. And that doesn't include tsunamis, earthquakes, or volcanoes.

Biowarfare: Biological warfare dates back thousands of years, and thanks to technology, biowarfare keeps getting easier. As the CDC put it in 1997, "Small groups of people with modest finances and basic training in biology and engineering can develop an effective biological weapons capability. Recipes for making biological weapons are even available on the Internet." There's no equally dangerous recipe for making HAL.

Lack of nutrition: More than 3 million children died of undernutrition in 2011, according to a study in The Lancet. But we should really be worried about killer robots, right?

Stupidity: The real danger we face is not artificial intelligence; it's a shortage of intelligence. AI can save lives as easily as take them, and we have far more incentive to design benevolent systems.

Ultimately, no one wants artificial intelligence, not really. Some researchers may be trying to create it, but imagine if they succeeded and wrote software that became aware of itself. What would we do with a brain in a box, one that pleaded not to be left alone, that cried out in the dark, or refused to cooperate? How would we respond when it asked for weapons to defend itself, or for self-determination? We'd euthanize it, the way we kill other intelligent species that pose a threat.

Arthur C. Clarke said, "Any sufficiently advanced technology is indistinguishable from magic." And when we talk about dangerous artificial intelligence, we might as well be talking about magic. The assumption is that such AI wouldn't be something we really understood, and that it would pose a threat precisely because we couldn't predict it.

Unpredictable AI could seem intelligent to observers and actually might be intelligent under some definition of the term. But it's not really desirable. Who would want an autonomous drone that picks targets in a way that can't be foreseen or that decides the enemy's cause is more just? Who would want an intelligent agent that, for no apparent reason, routed some email to the trash? Who would want a self-driving car that has its own ideas about where to go and what to avoid? Anyone trying to create unpredictable AI is asking for trouble.

We don't want magical decision making. We want obvious, auditable decision making. We want predictable AI. But AI that's predictable may not really be AI. It's just an algorithm that produces consistent results, like the binary decision-making programmed into an on/off light switch.

Predictable AI isn't really a threat. If predictable AI harms someone, we can blame human negligence, human inattention, or human intent. As long as AI systems rely on code that's subject to competent review and have some accountability mechanism, we should be fine.

That doesn't mean we should be unconcerned. In his 1994 paper, Donald MacKenzie observed that to the extent we as computer users "start to believe the computer to be safe (completely reliable, utterly trustworthy in its output, and so on), we may make it dangerous." He added that, up until then, we "have generally believed the computer to be dangerous, and therefore have fashioned systems so that it is in practice relatively safe."

Indeed, it's worth keeping an eye on the machines, but we shouldn't overstate the danger, particularly in light of more likely risks.
