The Courtroom Factor in GenAI’s Future

Developers of generative AI, as well as businesses that use it, may be targets of liability when plaintiffs cry foul over the technology.

With each push generative AI (GenAI) makes into business and consumer spaces, legal questions arise largely around copyright, ownership, and privacy.

Do proponents of GenAI have a right to use the resources they train their algorithms on? Can creators of content and data refuse to allow their works to be ingested by GenAI? Who owns GenAI creations? Should derivative GenAI works fall under copyright protection? Does GenAI, in its pursuit of more and more training data, put privacy in jeopardy?

The litany of legal issues associated with GenAI, what it learns from, and what it produces may continue to grow as existing laws get put to the test and new regulations are discussed. The New York Times took OpenAI and Microsoft to court claiming infringement of its copyright, a stance that some dispute on the grounds that copyright law was not meant to govern the licensing of training data.

In addition to news outlets, other creators such as artists and actors have sought to halt GenAI from scraping their works or likenesses to develop content without their say or compensation. Further, there are already insidious examples of GenAI used to create deepfakes to defame and damage the image of individuals, including the production of completely fabricated derogatory, pornographic images meant to denigrate celebrities such as Taylor Swift.

For all of its potential, GenAI faces legal challenges that may shape its future in ways its proponents did not foresee.

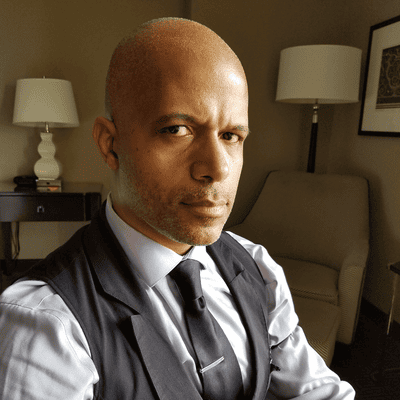

Christopher J. Valente, partner with law firm K&L Gates, shares some insights with InformationWeek about some of GenAI’s legal quandaries.

ChatGPT and generative AI launched us into this new arena with questions of what is OK, what is not OK, and what laws already cover this space. Do we go back to the legal drawing board? As different implementations of AI start to hit, are people raising their hands to say, “I don’t think so?”

Much of what there is to talk about to date in the litigation space is cases that have been brought against developers of these generative AI tools, and I think you put your finger on it. ChatGPT arrives on the scene and plaintiffs’ lawyers are bringing claims that tend, at least initially, to focus on the inputs of generative AI tools, and that’s primarily the training data, and then the outputs, what’s actually being generated.

Plaintiffs have alleged a wide variety of theories -- copyright infringement is one of the leading claims that you see, and I think that we’ve started to see courts issue decisions on some of these relatively foundational issues. One question that I think will be answered in 2024 that hasn’t really been answered yet is the use of data, of materials, of -- in certain instances -- copyrighted works by these developers to train AI tools.

So, I think that’s going to be the big open question that we’re going to start to see answers to in 2024 and those questions right now -- these are the headlines. Does the use of copyrighted work as training data violate intellectual property protections? Does it constitute fair use? Is there something inherently unfair about using these works, and really anything users put on the internet, to train these AI models? I think we’re going to start to see these questions answered this year.

How much haggling or wrangling has been going on to say whether current laws on the books are sufficient or not sufficient? Whether or not they’re up to the task of really answering these questions? At a local, state, or national level, there are different conversations happening about getting our arms around this in terms of policy. But with what’s on the books now, is existing policy kind of like a dinosaur?

Most everyone -- and this is plaintiffs’ lawyers, this is regulators writ large -- is relying on existing laws to regulate the use of generative AI.

Christopher J. Valente, K&L Gates

Are they always a perfect fit? I think some folks would say no. I think those that are pursuing the claims or are regulating are going to figure that out. The claims that you see in the litigation that’s been filed -- these are based on the laws that are in place and whether that’s the copyright laws or the unfair and deceptive practices acts that are in place throughout the country or otherwise. I think one of the things that we’re going to see is the courts try to sort this out. The courts will answer that question. In my opinion, I think you’ve seen some courts express skepticism on some of the claims brought pursuant to some of the existing laws. Of course, the counterpoint to that is that many of these cases are still at very early stages, and so the burden on the plaintiffs is relatively light. There are one or two outliers in terms of litigation that’s out there that may give us some early, more concrete guidance, particularly on the data training question, but by and large, both plaintiffs and regulators are relying on the existing laws to bring their claims against developers and others.

How comparable, or not at all, is this to when digital content, particularly in the music industry, faced arguments of ownership and piracy in the earlier days of the Internet -- when the first iteration of Napster and other filesharing applications were out? There was that crusade to clamp down on those things -- are we in a comparable kind of space now or is it different?

I’m not a technology expert; I’m not a technologist, but I feel like the technology here is different, and it’s different in how generative AI functions both, as I mentioned earlier, on the input side and the output side. Looking at it through the legal lens, it may not be apples to apples here.

Have any of the GenAI cases had an inkling of precedent being set, or are we still figuring things out across all of these cases that are being argued right now?

I think that you are starting to see some trends. I think courts are holding these claims to the traditional standard at the motion to dismiss stage, which is the early stage they’re at. But there are a few cases that are further ahead, and I think we’re starting to understand what courts will and will not tolerate in terms of pleading claims, and we’ll see how far these cases get.

Is there anything out there right now that’s precedential? I think folks would argue that there is, and there’s already some good precedent, but it’s early days.

From what you have seen in terms of cases out there, have any of them sought to essentially bring the guillotine down on this technology? Are there nuanced questions being asked versus just seeking a cease and desist?

You see cases that are running the gamut. I think various parties have taken different strategic approaches to what they’re asking for. I think fundamentally the relief requested goes to the heart of how these generative AI models work, and if the courts decide that, “No, the generative AI model should not have been set up that way, or cannot work that way,” then I think they’ll have to shift the model. Early cases or a few early cases invoked the end times, invoked these very negative things but the cases and complaints have run the gamut.

What are you trying to pay attention to in terms of what’s happening in this litigation landscape? Is it a matter of watching what’s happening on the international stage? Looking more domestic US, potentially at a national level? Local? Or are you trying your best to be abreast of all of it right now because so many different things are happening?

There are a lot of moving parts. You kind of hit that on the head. Certainly, every day there’s something new, some development, but let me focus on my area of expertise, which is litigation and where I see some of the domestic generative AI litigation perhaps trending or where I think we’re going to see an increase in litigation going forward. I think that’s going to be twofold. I think you’re going to continue to see the intellectual property issues attendant to generative AI litigated. I think that’s one area that’s inevitable. I think the other area that we’re really going to start to see, and we already are seeing an uptick in litigation, is in the use and deployment of generative AI by companies.

Let me frame it this way. As companies attempt to take advantage of the promise of generative AI, they’re going to, they already have, and they will continue to deploy generative AI tools, generative AI systems, more advanced systems in terms of machine learning, and generative aspects of AI in their businesses. I think we’ll see a steady increase in use -- and some folks would say misuse -- of AI.

It’s trickling out where plaintiffs allege that the business or the entity has done something wrong using AI. I think the claims will start to add that the AI you’re using has itself done something wrong, and I think liability will flow back to, or at least the theory of liability will flow back to, the business. I think the deployment of these systems can and will create an environment that is ripe with test targets for plaintiffs’ attorneys. This will be particularly highlighted because of the attendant challenges related to data and protecting data. Data is a key part of why and how a lot of these generative AI systems are valuable. There are inherent risks that are going to be out there in the use of these products, and businesses will have to understand the technology, understand those risks, and mitigate those risks or be subject to an uptick in litigation.
