Private Clouds: Tough To Build With Today's Tech

Missing standards, scant automation, and weak management tools make this next step beyond server virtualization a challenge.

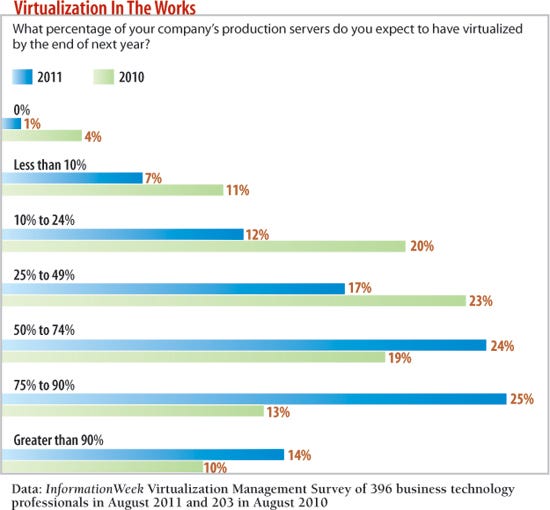

Let's not spend time debating whether fully virtualized data centers will become the norm. They will, and sooner than you may think. You have bigger challenges than how soon you can get 50% or 75% or 99% of your servers virtualized.

Almost half of the companies represented in our InformationWeek 2011 Virtualization Management Survey of 396 business technology pros buy into the broader vision of a private cloud. By private cloud, we mean an internal network that combines compute, storage, and other data center resources with high virtualization, hardware integration, automation, monitoring, and orchestration. But getting there with today's technology will be tough. We'll look at the range of problems IT faces, including multivendor environments, limited automation, and still-emerging technology and standards.

Standards are scarce indeed, which makes every purchasing decision dicey. You must understand how every component interacts with every other component, and because extensive server virtualization has increased operational complexity, that can be extraordinarily difficult to get your arms around. IT teams looking to conventional network and systems management products for help are finding these expensive tools inadequate to the task.

"The only savings realized from virtualization is fewer physical servers," says one respondent to our survey. "Costs have increased via more expensive servers with bigger I/O and more memory, added cost of the hypervisor, and a much more difficult time to resolve problems when they occur."

VMware is still the go-to vendor when IT organizations talk enterprise-class server virtualization. Only 36% of the respondents to our survey have secondary hypervisors in use at their companies. But Citrix and Microsoft are closing the gap.

Asked to rate the importance of a dozen virtualization features, survey respondents cited high availability as No. 1 and price a very close second. Both Microsoft Hyper-V R2 and Citrix XenServer offer high-availability features at a reasonable price. VMware also offers high availability in its entry-level packages, but it doesn't bundle features such as Distributed Resource Scheduler (DRS), which load balances virtual machines across hosts, with its low-cost vSphere Essentials kits, which makes those kits an incomplete offering.

Other highly valued factors include live virtual machine migration (available from all major vendors), fault tolerance, load balancing, and virtual switching/networking. Citrix and Microsoft recently cozied up to Marathon Technologies to provide fault tolerance for their platforms.

The VMware-only features, such as Storage DRS (which load balances data store I/O) and Storage vMotion, land in last place in our survey. VMware's decision this year to tie vSphere licensing to the amount of virtual memory (vRAM) allocated to VMs, charging more once a set allocation was exceeded, drew such howls from customers that VMware raised the limit. But that move only delays a price increase that could drive companies to consider alternatives. If it's bells and whistles like Storage DRS and Storage vMotion that VMware expects to justify higher licensing costs, our survey respondents aren't buying it. "With steady improvements to Hyper-V and Xen, and Oracle's integration of Virtual Iron into their VM product, we have lots of alternatives to consider," says one respondent.
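To see why memory-based pricing worries customers, here's a minimal sketch of a pooled-vRAM licensing model of the kind VMware introduced with vSphere 5: each per-processor license contributes a vRAM entitlement to a shared pool, and that pool must cover the memory allocated to powered-on VMs. The function name, the cluster, and the entitlement figures below are hypothetical illustrations, not VMware's published terms, and the model ignores details such as per-VM caps.

```python
import math


def licenses_needed(cpu_sockets: int, allocated_vram_gb: float,
                    entitlement_per_license_gb: float) -> int:
    """Per-processor licenses required under a pooled-vRAM model (hypothetical).

    Assumptions for illustration:
    - every physical CPU socket needs at least one license;
    - each license adds `entitlement_per_license_gb` of vRAM to a shared pool;
    - the pool must cover all vRAM allocated to powered-on VMs.
    """
    if entitlement_per_license_gb <= 0:
        raise ValueError("entitlement must be positive")
    # Licenses needed just to cover the memory allocated to running VMs.
    for_memory = math.ceil(allocated_vram_gb / entitlement_per_license_gb)
    # Never fewer licenses than physical sockets.
    return max(cpu_sockets, for_memory)


if __name__ == "__main__":
    # Hypothetical cluster: 4 hosts x 2 sockets, 1,024GB of vRAM allocated.
    for entitlement_gb in (32, 64):  # hypothetical entitlements, not a price list
        print(entitlement_gb, licenses_needed(8, 1024, entitlement_gb))
```

With these made-up numbers, doubling the entitlement halves the count from 32 licenses to 16, yet the densely consolidated cluster still needs twice the eight licenses its sockets alone would require. That is the sense in which raising the limit delays, rather than removes, the price increase.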

However, mixing production hypervisors almost guarantees that companies won't have a unified, automated disaster recovery scheme. And it can require some deep expertise if you want one policy to govern all of your systems, a common goal.

Master Disaster Recovery Is Elusive

At first, it surprised us that automated disaster recovery was rated the third-most-important feature. Currently, only VMware offers a reasonably mature product in this area, Site Recovery Manager. Even that product, which provides an integrated "runbook" showing the status of some of the data center operations you'd need to track during a disaster, won't fully automate disaster recovery without a serious implementation and integration effort.

Then it hit us: Rising demand for automated disaster recovery speaks volumes about what IT expects from next-generation virtualization. VMware's offering tries to address the big picture of disaster recovery automation by integrating tightly with every component in the DR stack, starting with storage replication. VMware's goal is lofty: to provide fully managed and automated failover to a secondary site. That includes managing the state of replication, the virtualization infrastructure, connectivity, and the myriad other variables involved in a successful failover. VMware hopes to improve the already excellent value proposition of virtualization for disaster recovery and business continuity by increasing automation.
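The failover sequence described above can be pictured as an ordered runbook in which each step must succeed before the next one starts. The sketch below is a vendor-neutral illustration only; the `Step` type, the step names, and the lambdas standing in for storage, hypervisor, and network operations are hypothetical and are not Site Recovery Manager's API.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Step:
    name: str
    action: Callable[[], bool]  # hypothetical hook: returns True on success


def run_failover(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Execute runbook steps in order, stopping at the first failure.

    Returns (succeeded, log) so an operator can see where failover halted.
    """
    log: List[str] = []
    for step in steps:
        ok = step.action()
        log.append(f"{step.name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            # Halt rather than power on VMs on top of an inconsistent state.
            return False, log
    return True, log


if __name__ == "__main__":
    # Hypothetical stand-ins for real storage/hypervisor/network calls.
    runbook = [
        Step("verify replication is current", lambda: True),
        Step("promote replica volumes at secondary site", lambda: True),
        Step("reconnect VM networks at secondary site", lambda: False),
        Step("power on VMs in priority order", lambda: True),
    ]
    succeeded, log = run_failover(runbook)
    print(succeeded)
    print("\n".join(log))
```

The loop itself is trivial. The hard part is writing and maintaining each `action` against every storage array, hypervisor, and network device in the stack.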

Unfortunately, a big problem is the lack of standardization. While VMware, Citrix, and Microsoft all have APIs for their diverse virtualization portfolios, a lack of standards and protocols means it's difficult to integrate across hardware and software API boundaries.

Worse, there's no clear road map for an automated, pervasively virtualized infrastructure. Who makes your hypervisor? Storage arrays? Servers? All of these vendors inject additional complexity and make supplying reliable cost numbers for automation projects nearly impossible. Setting up true automation becomes a nightmare of unbudgeted expenses, internecine warfare, and unforeseen roadblocks as software development and data center professionals try to integrate diverse hardware platforms, hypervisors, and application stacks into a resilient and, in theory, hands-off infrastructure.

The good news is that vendors are starting to build in software API mechanisms to make automation possible. The bad news is that the cost of developing a fully automated infrastructure remains outside the reach of all but the largest companies, even if you can afford centralized storage, virtualization, load balancing, and high availability. Still, automation is an essential element of the ultimate goal: private clouds.

Private Cloud Drives Virtualization

Private clouds promise an agile data center, where workloads can be moved around to different physical servers, storage, and networking gear to meet changing demand. And you can't have a private cloud without virtualization, since the private cloud architecture requires breaking free from physical network and infrastructure constraints. Forty-eight percent of our survey respondents are working toward private clouds, and another 33% are investigating them.

IT vendors are introducing products aimed at private clouds, expanding virtualization's value. We see this innovation in interconnects, such as the PCI-SIG's Single Root I/O Virtualization (SR-IOV) specification, which lets one PCI Express adapter present itself to VMs as multiple virtual adapters; in processors, with Intel VT-x and AMD-V; in storage, with hybrid cache mechanisms; in storage controllers, with robust software APIs; in applications, with cloud delivery mechanisms, distributed processing, and encapsulation; in networking, with iSCSI/FCoIP; and in wide area networking, with Virtual Private LAN Service and Cisco's Overlay Transport Virtualization.

This innovation should be both exciting and depressing for IT. Vendors are solving individual pieces of the private cloud puzzle, but no one offers a good way to run the larger infrastructure those pieces add up to. That shortcoming also makes it harder to spell out the value to the rest of the company.

"Upper management, including within IT, has not fully grasped the concept of virtualization," says one survey respondent. "Requirements for properly implementing a private cloud still get questioned on an ROI-per-item-purchased basis, instead of the relative increase in capability the purchase would provide for the users of the cloud."

The top driver for survey respondents who are working toward private clouds is improved application availability. We see a similar priority in another part of our survey, the measures of virtualization success, where application uptime and efficiency rate highly. The message is clear: this flexible data center needs to deliver greater reliability, because employees want unrestricted access to applications from anywhere, at any time.

Operating savings is the No. 2 driver for private clouds (chosen by 37%), which isn't a big surprise. But it did surprise us that automated provisioning of resources came in as No. 3, selected by 31% of respondents. Honestly, should we be empowering finance to spin up eight or nine Oracle servers whenever it feels like it? We don't think so.
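One way to reconcile automated provisioning with the worry about finance spinning up Oracle servers at will is to put a policy check in front of every self-service request. The sketch below is a hypothetical illustration: the department quotas, the `Request` fields, the template names, and the rule that certain templates need human approval are all assumptions, not features of any particular product.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Request:
    department: str
    template: str  # hypothetical VM template name, e.g. "oracle-db"
    count: int


@dataclass
class ProvisioningPolicy:
    quotas: Dict[str, int]  # maximum VMs per department
    in_use: Dict[str, int] = field(default_factory=dict)
    approval_required: Set[str] = field(default_factory=set)

    def evaluate(self, req: Request) -> str:
        """Return 'approve', 'needs-approval', or 'deny'."""
        if req.count <= 0:
            return "deny"
        used = self.in_use.get(req.department, 0)
        quota = self.quotas.get(req.department, 0)  # no quota means no self-service
        if used + req.count > quota:
            return "deny"
        if req.template in self.approval_required:
            # Expensive or heavily licensed templates go to a human reviewer.
            return "needs-approval"
        return "approve"

    def commit(self, req: Request) -> None:
        """Record provisioned VMs against the department's quota."""
        self.in_use[req.department] = self.in_use.get(req.department, 0) + req.count


if __name__ == "__main__":
    policy = ProvisioningPolicy(quotas={"finance": 10},
                                approval_required={"oracle-db"})
    print(policy.evaluate(Request("finance", "oracle-db", 8)))  # needs-approval
    print(policy.evaluate(Request("finance", "web", 12)))       # deny
    print(policy.evaluate(Request("finance", "web", 2)))        # approve
```

Automation still handles routine requests instantly; the policy simply keeps the costly ones from bypassing review.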

Meeting variable workload demands is a driver for only 19%. At the bottom of the list of drivers for private cloud builders are compliance (8%) and chargebacks (3%).

Not All About Servers

Survey respondents ranked their virtualization priorities in this order: server, storage, desktop, and applications, with network virtualization far behind.

Storage virtualization, in use at 57% of respondents' companies, has clear cost benefits. Savings come via deduplication, tiering, and functions such as hardware-side disk snapshots. But the real advantage of storage virtualization is that it finally gives IT organizations a fighting chance of managing their big, messy piles of storage as one resource, with policy-based tools. It's essential to tiered-storage strategies.
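Deduplication saves capacity by storing each unique block once and replacing repeats with references. The sketch below illustrates fixed-size block deduplication using SHA-256 fingerprints from Python's standard `hashlib`. The 4KB block size, the `DedupStore` class, and the in-memory dictionary are assumptions for illustration; real arrays use their own block sizes, often variable-length chunking, persistent metadata, and sometimes a byte-by-byte check when fingerprints match.

```python
import hashlib
from typing import Dict, List

BLOCK_SIZE = 4096  # assumed block size for illustration


class DedupStore:
    def __init__(self) -> None:
        self.blocks: Dict[str, bytes] = {}  # fingerprint -> unique block data

    def write(self, data: bytes) -> List[str]:
        """Split data into blocks, keep only new ones, return the block map."""
        recipe: List[str] = []
        for offset in range(0, len(data), BLOCK_SIZE):
            block = data[offset:offset + BLOCK_SIZE]
            digest = hashlib.sha256(block).hexdigest()
            # Only the first copy of any block consumes capacity.
            self.blocks.setdefault(digest, block)
            recipe.append(digest)
        return recipe

    def read(self, recipe: List[str]) -> bytes:
        """Reassemble the original data from its block fingerprints."""
        return b"".join(self.blocks[d] for d in recipe)

    def stored_bytes(self) -> int:
        """Physical capacity actually consumed by unique blocks."""
        return sum(len(b) for b in self.blocks.values())


if __name__ == "__main__":
    # Two hypothetical VM images that share a three-block OS base.
    base = b"\x01" * BLOCK_SIZE + b"\x02" * BLOCK_SIZE + b"\x03" * BLOCK_SIZE
    store = DedupStore()
    vm1 = store.write(base + b"\x04" * BLOCK_SIZE)
    vm2 = store.write(base + b"\x05" * BLOCK_SIZE)
    logical = (len(vm1) + len(vm2)) * BLOCK_SIZE
    print(logical, store.stored_bytes())  # 32768 20480
```

Two four-block images that share a three-block base consume five blocks rather than eight, which is why deduplication pays off so well on racks of near-identical virtual machines.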

Application virtualization, in use at 42% of respondents' IT organizations, is a big umbrella. It can refer to application delivery services such as Citrix XenApp, or to application encapsulation and abstraction, such as VMware ThinApp, which packages an application to run isolated from the underlying desktop operating system. Or it can be a combination of the two approaches. Regardless of type, application virtualization accelerates app deployment, centralizes application management, and facilitates private cloud access.

Desktop virtualization is in use at 44% of respondents' companies and under evaluation at another 42%. IT teams are struggling with the "bring your own device" movement, and serving desktops or applications from a centralized repository all but eliminates the BYOD security blues, because data doesn't reside on the machine.

Just 10% of respondents' companies make extensive use of I/O virtualization and network virtualization; 34% have no plans for the former, and 44% have no plans for the latter.

Look for these percentages to rise substantially over the coming year, because network and I/O virtualization will relieve a number of longstanding pain points. The higher density and fluidity of virtual infrastructures mean they demand network topologies that can be provisioned dynamically in the same way VMs are--without touching physical hardware.

The main problem is the lack of standards and uniformity. While most I/O virtualization vendors have settled on PCI Express as a transport, backed up by SR-IOV for virtualized adapters, others use InfiniBand or 10-Gbps Ethernet with proprietary virtual breakouts. Pure network virtualization offerings show the same proliferation of approaches. If server virtualization still suffers from a lack of standards, I/O and network virtualization are even more embryonic.

Standards In Play

It's unfortunate that there isn't more progress on standards. However, two have emerged.

The Open Virtualization Format is a nonproprietary way of packaging virtual machines, including their disk files, for distribution. VMware, Citrix, and Microsoft use proprietary formats for virtual machines, but their products can read and import OVF files. That makes OVF a convenient way to move a machine to a different platform: export it to an OVF package first, then import it on the target.
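An OVF package pairs an XML descriptor with the disk files it references. The sketch below uses Python's standard `xml.etree.ElementTree` to list the files, disks, and virtual systems a descriptor declares. The namespace URI is the one used by the DMTF's OVF 1.x envelope schema; the sample descriptor and the `summarize_ovf` helper are hypothetical and heavily trimmed, since a real descriptor also carries virtual hardware sections, product information, and more.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

OVF_NS = "http://schemas.dmtf.org/ovf/envelope/1"
NS = {"ovf": OVF_NS}


def attr(el: ET.Element, name: str) -> str:
    """Read a namespace-qualified OVF attribute such as ovf:href."""
    return el.get(f"{{{OVF_NS}}}{name}", "")


def summarize_ovf(xml_text: str) -> Dict[str, List]:
    """List referenced files, disks (diskId, fileRef), and virtual systems."""
    root = ET.fromstring(xml_text)
    files = [attr(f, "href") for f in root.findall("ovf:References/ovf:File", NS)]
    disks = [(attr(d, "diskId"), attr(d, "fileRef"))
             for d in root.findall("ovf:DiskSection/ovf:Disk", NS)]
    systems = [attr(vs, "id") for vs in root.findall(".//ovf:VirtualSystem", NS)]
    return {"files": files, "disks": disks, "virtual_systems": systems}


# Hypothetical, trimmed descriptor for a single VM with one disk.
SAMPLE = """<?xml version="1.0"?>
<Envelope xmlns="http://schemas.dmtf.org/ovf/envelope/1"
          xmlns:ovf="http://schemas.dmtf.org/ovf/envelope/1">
  <References>
    <File ovf:id="file1" ovf:href="web01-disk1.vmdk"/>
  </References>
  <DiskSection>
    <Info>Virtual disks</Info>
    <Disk ovf:diskId="vmdisk1" ovf:fileRef="file1" ovf:capacity="21474836480"/>
  </DiskSection>
  <VirtualSystem ovf:id="web01">
    <Info>A single virtual machine</Info>
  </VirtualSystem>
</Envelope>"""

if __name__ == "__main__":
    print(summarize_ovf(SAMPLE))
```

Because the descriptor is plain, vendor-neutral XML, any platform that understands the schema can discover what a package contains before importing it, which is what makes OVF useful as a go-between.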

The other fledgling standard is VMAN, a virtualization management standard developed by the Distributed Management Task Force, an industry standards body for systems management with broad vendor support. VMAN purports to simplify virtualization management by exposing common management tasks through a single protocol. However, we have yet to see usable VMAN-based products, despite the standard's launch in 2008. Maybe it's time for enterprise IT groups to start applying pressure.

Management Trends

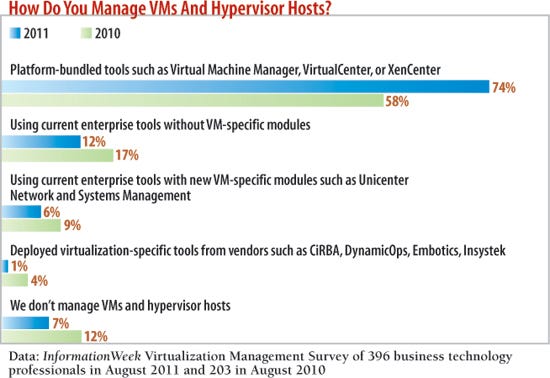

With early virtualization deployments, IT shops tried to integrate virtualization into their existing management platforms. No longer.

Between last year's survey and this year's, the share of respondents using built-in or platform-bundled tools for virtual machine and infrastructure management jumped 16 percentage points, from 58% to 74%.

It's difficult to pinpoint the exact reason for this shift, but we suspect it's because virtualization management is hard to integrate into the previous generation of network and system management suites. While many of those suites do offer management information bases (MIBs) for interacting with major virtualization platforms, new hypervisor features come out so quickly that even large management vendors fall behind in revising their core products. Delays drive admins back to the virtualization vendor's management tools, and they tend to stay there.

Companies want automated management, our survey shows, so there's a big opportunity for whichever vendor delivers. Failure recovery is still as labor-intensive as it has always been. Server virtualization has, technically, made automation easier, but as we've discussed, it's also made things more complex.

And that complexity only accounts for server virtualization. If you start to add virtualized storage, network, and I/O, the complexity of a seemingly simple failover operation can be enormous. Imagine trying to write an automation script that can talk to one or more storage virtualization controllers and one or more hypervisor platforms; can check the performance of multiple applications; and can communicate with an I/O controller. Just making decisions based on such a large amount of information is difficult; now try to incorporate that code into a legacy network and system management platform, and maintain and test it through moves/adds/changes and hardware and software updates.
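A drastically simplified version of the script imagined above might hide each vendor's API behind a common interface and then combine the readings into one go/no-go decision. Everything here is hypothetical: the `StorageController`, `Hypervisor`, and `AppMonitor` protocols, the thresholds, and the `Fake*` classes stand in for the vendor-specific adapter code you'd actually have to write, test, and maintain.

```python
from dataclasses import dataclass
from typing import List, Protocol, Tuple


class StorageController(Protocol):
    def replication_lag_seconds(self) -> float: ...


class Hypervisor(Protocol):
    def healthy_hosts(self) -> int: ...


class AppMonitor(Protocol):
    def response_time_ms(self) -> float: ...


@dataclass
class Thresholds:
    max_replication_lag_s: float = 300.0  # hypothetical data-loss tolerance
    min_healthy_hosts: int = 2
    max_response_ms: float = 2000.0


def decide_failover(storage: List[StorageController],
                    hypervisors: List[Hypervisor],
                    apps: List[AppMonitor],
                    t: Thresholds) -> Tuple[bool, List[str]]:
    """Combine readings from every layer into one go/no-go failover decision."""
    reasons: List[str] = []
    hosts = sum(h.healthy_hosts() for h in hypervisors)
    if hosts < t.min_healthy_hosts:
        reasons.append(f"healthy hosts {hosts} < {t.min_healthy_hosts}")
    slow = [a for a in apps if a.response_time_ms() > t.max_response_ms]
    if slow:
        reasons.append(f"{len(slow)} app(s) over response-time limit")
    if not reasons:
        return False, ["primary site healthy"]
    # Only fail over if the secondary site's data is fresh enough.
    worst_lag = max((s.replication_lag_seconds() for s in storage),
                    default=float("inf"))
    if worst_lag > t.max_replication_lag_s:
        # Too much data would be lost; escalate to a human instead.
        return False, reasons + [f"replication lag {worst_lag:.0f}s too high"]
    return True, reasons


# Hypothetical stand-ins for vendor-specific adapters.
@dataclass
class FakeArray:
    lag: float

    def replication_lag_seconds(self) -> float:
        return self.lag


@dataclass
class FakeCluster:
    hosts: int

    def healthy_hosts(self) -> int:
        return self.hosts


@dataclass
class FakeApp:
    ms: float

    def response_time_ms(self) -> float:
        return self.ms


if __name__ == "__main__":
    print(decide_failover([FakeArray(30)], [FakeCluster(1)], [FakeApp(150)],
                          Thresholds()))
```

Even this toy version has to weigh a degraded primary site against the risk of data loss; each real adapter behind these interfaces is where the maintenance burden through moves, adds, changes, and updates actually lands.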

Add to this mess predictive analytics for IT operations, which depends on a good network management tool and event database, and the scenario becomes even more complicated.

Make The Business Case

IT organizations are using a mix of benchmarks to gauge the success of their virtualization deployments. More than half of our survey respondents, 55%, cite cost savings, but we also see some of the private cloud drivers being used to measure the success of virtualization--like improved application uptime (39%) and improved operating efficiency (39%).

"Clients are less interested in server virtualization and more interested in services automation," says an architect for a major IT integrator. "Business doesn't care about the technology. They want to know the business value in terms of go-to-market advantages."

One striking survey finding is that 61% of respondents say they don't measure return on investment, relying instead on gut feeling. So how does that work exactly?

Actually, that finding is a good indicator of how deeply embedded virtualization is in the modern IT mindset. Companies aren't measuring ROI for server virtualization because it's the industry standard. When was the last time anyone asked for ROI figures on a firewall?

Yet while server virtualization may be a no-brainer, the same can't be said for I/O, network, and desktop virtualization. Even storage virtualization, while growing, isn't a clear pick for every company.

Remember when you started virtualizing your server infrastructure eight or so years ago, and the basic benefits (consolidation, flexibility, and power savings) arrived more or less automatically? In contrast, the next moves, like automated disaster recovery and the flexibility of private clouds, are immensely difficult to engineer, even with best-of-breed systems.

The next wave of virtualization benefits could prove to be more dramatic than the first, but there's a lot of complexity to sort through, so don't expect them to come without some sweat.

InformationWeek: Oct. 31, 2011 Issue
