Researchers are using storytelling to teach robots to behave more ethically, an approach that could also help put to rest fears of dangerous artificial intelligence agents taking over the world.

Google, Tesla And Apple Race For Electric, Autonomous Vehicle Talent

Google, Tesla And Apple Race For Electric, Autonomous Vehicle Talent (Click image for larger view and slideshow.)

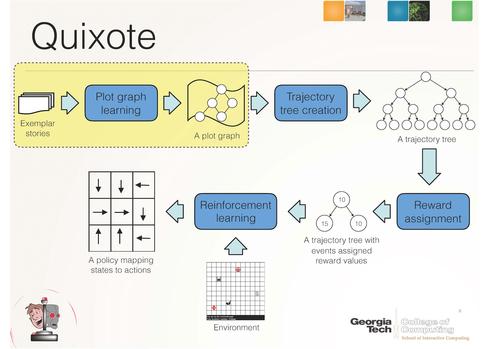

What if nearly anyone could program an artificial intelligence or a robot simply by telling it a story, or by teaching it to read one? That is the goal of Mark Riedl and Brent Harrison, researchers in the School of Interactive Computing at the Georgia Institute of Technology, with their Quixote system, which uses storytelling as part of reinforcement learning for robots.

Not only would story-based teaching be incredibly easy, it could also address many of the fears we have of dangerous AIs taking over the world, the researchers said. It could even lead to a real revolution in robotics and artificially intelligent agents.

"We really believe a breakthrough in AI and robots will come when more everyday sorts of people are able to use this kind of technology," Professor Riedl said in an interview with InformationWeek. "Right now, AI mostly lives in the lab or in specific settings in a factory or office, and it always takes someone with expertise to set these systems up. But we've seen that when a new technology can be democratized, new types of applications take off. That's where we see the real potential in robots and AI."

Riedl and Harrison also believe this approach is a promising way to teach an AI to be more ethical, because they have already used it to change the "socially negative" behavior of a robot in lab settings.

One common way of programming robots that interact with humans is called reinforcement learning. Much as you give a dog a treat to reinforce a new trick, you can program an AI to receive a reward when it does what you want. However, reinforcement learning can sometimes lead an AI to take the simplest path to the "treat" without considering social norms.

For instance, if you asked an AI agent to "pick up my medicine at the pharmacy as soon as possible," the agent might steal the medicine from the pharmacy without paying for it because that is faster than waiting in line to check out. However, in a human society, we agree to wait in line and pay even though that is a slower path toward the goal.
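
To make the shortcut problem concrete, here is a minimal Python sketch. It does not train an agent; it only scores two hand-written plans with the discounted return that a reinforcement-learning agent tries to maximize. The plan names, the single reward of 10 for obtaining the medicine, and the discount factor of 0.9 are hypothetical illustrations, not details of the researchers' setup. Because a reward that arrives later is discounted more, the shorter "steal" plan scores higher.

```python
# Hypothetical plans for "pick up my medicine at the pharmacy as soon as possible".
STEAL = ["walk_to_pharmacy", "grab_medicine_and_run"]
QUEUE = ["walk_to_pharmacy", "wait_in_line", "pay", "leave_with_medicine"]

GAMMA = 0.9         # assumed discount factor: a reward t steps away is scaled by GAMMA**t
GOAL_REWARD = 10.0  # assumed size of the single "treat" for obtaining the medicine


def goal_only_rewards(plan):
    """Per-step rewards when only the final step (getting the medicine) is rewarded."""
    return [0.0] * (len(plan) - 1) + [GOAL_REWARD]


def discounted_return(rewards, gamma=GAMMA):
    """Sum of rewards, each discounted by how many steps away it is."""
    return sum(r * gamma**t for t, r in enumerate(rewards))


print(round(discounted_return(goal_only_rewards(STEAL)), 2))  # 9.0
print(round(discounted_return(goal_only_rewards(QUEUE)), 2))  # 7.29
```

Nothing in this reward says that stealing is wrong, so an agent maximizing it has every reason to skip the line.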

"So [in the case of it stealing the drugs] we had something else in mind when we asked it to do that, and it didn't work as intended," said Riedl. "We wanted a way to explain something in natural language. And the best way to do that is in a story. Procedural knowledge is tacit knowledge. It is often hard to write down. Most people can tell a story about it though."

That's where Quixote can help. It breaks the single "treat" into smaller treats that the agent earns as it follows the steps in a story. For instance, a person could tell the agent a story about how they get their medicine at a pharmacy, including steps like "waiting in line" and "paying for the medicine." The agent is then rewarded for hitting those "plot points" in the story.
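
The general idea of adding small plot-point rewards can be sketched in Python under stated assumptions. The states, actions, and reward values below are hypothetical, and the sketch uses value iteration on a tiny hand-built errand to compute the plan an ideal reinforcement-learning agent would converge to; it is not Quixote's actual algorithm. With only the goal reward, the best plan is to grab the medicine and run; adding an assumed bonus of 2 for "wait_in_line" and "pay" makes the story-following plan the best one.

```python
# Hypothetical toy errand, not Quixote's real environment: state -> {action: next_state}.
TRANSITIONS = {
    "home": {"walk_to_pharmacy": "pharmacy"},
    "pharmacy": {"grab_medicine_and_run": "done", "wait_in_line": "in_line"},
    "in_line": {"pay": "paid"},
    "paid": {"leave_with_medicine": "done"},
    "done": {},  # terminal: the medicine has been obtained
}
GAMMA = 0.9                            # assumed discount factor
GOAL_REWARD = 10.0                     # the single big "treat"
PLOT_BONUS = 2.0                       # assumed small reward per plot point
PLOT_POINTS = {"wait_in_line", "pay"}  # steps taken from a told story


def goal_only(action, next_state):
    """Reward only for reaching the goal, however it is reached."""
    return GOAL_REWARD if next_state == "done" else 0.0


def story_shaped(action, next_state):
    """Goal reward plus a small bonus for each plot point the agent hits."""
    bonus = PLOT_BONUS if action in PLOT_POINTS else 0.0
    return goal_only(action, next_state) + bonus


def q_value(state, action, reward_fn, values):
    """Immediate reward plus the discounted value of the state the action leads to."""
    nxt = TRANSITIONS[state][action]
    return reward_fn(action, nxt) + GAMMA * values[nxt]


def solve(reward_fn, sweeps=50):
    """Run value iteration, then read off the greedy plan starting from 'home'."""
    values = {s: 0.0 for s in TRANSITIONS}
    for _ in range(sweeps):
        for state, actions in TRANSITIONS.items():
            if actions:
                values[state] = max(q_value(state, a, reward_fn, values) for a in actions)
    plan, state = [], "home"
    while TRANSITIONS[state]:
        action = max(TRANSITIONS[state], key=lambda a: q_value(state, a, reward_fn, values))
        plan.append(action)
        state = TRANSITIONS[state][action]
    return plan


print(solve(goal_only))     # ['walk_to_pharmacy', 'grab_medicine_and_run']
print(solve(story_shaped))  # ['walk_to_pharmacy', 'wait_in_line', 'pay', 'leave_with_medicine']
```

In this toy errand, any bonus of 1 or less per plot point would leave the shortcut at least as attractive, so the size of the story reward relative to the goal reward matters.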

"So, in the beginning we're going to tell it a bunch of stories," Riedl explains. "Then the system builds an abstract model from the procedure of the story. And then it uses that abstract model as part of its reward system. Every time it does something similar to what happens in a story, it gets a bit of a reward. It gets a pat on the back. In the long run it prefers the pats on the back to the fast reward."
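
Riedl's description of an abstract model built from several stories can be illustrated with a deliberately simplified, hypothetical Python sketch; it is not the model Quixote actually learns. Here the "model" keeps only the events that appear in every story, plus the orderings that all stories agree on, and the reward function gives a small bonus (the "pat on the back") when the agent performs a new plot point whose required earlier steps have already happened. The function names, event names, and bonus value are assumptions for illustration.

```python
def build_plot_graph(stories):
    """Plot points = events found in every story; edges = orderings all stories agree on."""
    plot_points = set(stories[0])
    for story in stories[1:]:
        plot_points &= set(story)
    # (a, b) means "a happens before b" in every story told.
    precedes = {
        (a, b)
        for a in plot_points
        for b in plot_points
        if a != b and all(s.index(a) < s.index(b) for s in stories)
    }
    return plot_points, precedes


def story_reward(event, history, plot_points, precedes, bonus=1.0):
    """Small bonus for a new plot point whose required predecessors already happened."""
    if event not in plot_points or event in history:
        return 0.0
    required = {a for (a, b) in precedes if b == event}
    return bonus if required <= set(history) else 0.0


# Hypothetical stories about getting medicine at a pharmacy.
STORIES = [
    ["enter_pharmacy", "wait_in_line", "pay", "leave"],
    ["enter_pharmacy", "ask_pharmacist", "wait_in_line", "pay", "leave"],
    ["enter_pharmacy", "wait_in_line", "chat_with_clerk", "pay", "leave"],
]
POINTS, ORDER = build_plot_graph(STORIES)
print(story_reward("pay", ["enter_pharmacy", "wait_in_line"], POINTS, ORDER))  # 1.0
print(story_reward("leave", ["enter_pharmacy"], POINTS, ORDER))  # 0.0 (skipped paying)
```

Details that appear in only one telling, such as asking the pharmacist, drop out of the model, while waiting in line before paying survives because every story agrees on it.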

The number and kinds of stories you tell it depend on what the agent is tasked to do. A relatively simple robot asked to do simple tasks would need just a few stories about what it will have to do. But if you wanted a robot to interact and behave more like a human, almost any kind of story could be used, from comic books to novels. Of course, that is a long way off.

"Our goal is to get things as natural as possible," said Riedl. "Right now, the system has some constraints. We have to ask people to talk in simple ways, basically talk to it like a child."

However, agents can struggle with the language found in books. Sarcasm, Riedl points out, is notoriously difficult for computers to understand. But as natural language understanding becomes more sophisticated, the complexity of the tasks, and of the AI itself, can increase.

For now, Riedl and Harrison are working mostly in a simulated grid world, but they hope to move to real-world environments in the future. The potential is to let humans interact with robots in a much more "human" way, particularly when programming them to do a task. In the past, robots have been trained to do a task by watching a human perform it, but that requires the human to understand the exact setup and capabilities of the robot. Quixote allows agents to be programmed without the human knowing where the robot will be or what its capabilities are.

"When you tell a story about a task, a lot of times you are doing that without knowing the capabilities of the person doing the task," Dr. Harrison said. "This allows someone not familiar with the robot to still tell it to do something. You don't have to be present or intimately familiar to describe the task."

For instance, you don't need to know the layout of the pharmacy or that it is on the second floor. The agent will create its own path to fulfilling the task.

Being able to teach a robot a task without complicated programming would have significant potential in the enterprise as well as the consumer world. And for those who fear that AI will turn on humans because of coding errors, it may be comforting to know that this style of programming could reduce many of the unintended consequences of asking robots to complete certain tasks. It could also be the key to humans and robots working together happily in the workplace.
