A Shaky Virtual Stack

Our InformationWeek 2013 Virtualization Management Survey shows automated service delivery is the future -- unless you want to find yourself managing cloud providers.

As we enter 2013, the technology foundation of our businesses is changing dramatically as every layer of the network and service delivery stack can now be abstracted. The final holdout of genuinely "hard" hardware was the network, but even that's going virtual. When we asked about software-defined networks in the InformationWeek 2013 Virtualization Management Survey, we found more interest in SDNs than in our SDN-specific survey just a few months earlier.

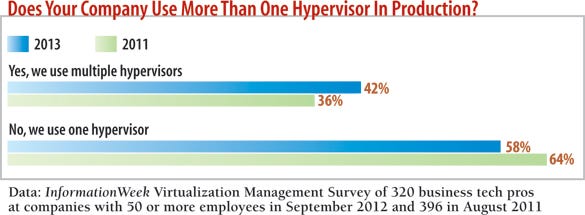

Higher up the stack, 42% of the 320 business technology respondents to our virtualization survey say their companies use multiple hypervisors, up from 36% in August 2011, and 11 of the 13 hypervisors we asked about are in use by more than 10% of respondents, compared with eight hypervisors two years ago. In this year's InformationWeek Global CIO Survey, 92% of respondents say they plan to increase their use of server virtualization, even ahead of expanding business intelligence (85%) and improving information security (84%). In our InformationWeek 2012 IT Spending Priorities Survey, improving security, increasing server virtualization, and upgrading the network and storage infrastructures came in atop a list of 16 projects competing for budgets.

However, the end goal of all of this virtualization--flexible, service-oriented IT that can respond quickly to business needs--is still a precarious proposition because it requires extensive automation and orchestration. That's a big worry for IT teams faced with coaxing performance out of highly virtualized, highly fragmented stacks using management technologies inadequate to the task.

In fact, confidence in next-generation virtualization technologies is low among many IT professionals we work with, even as use rises. Why? For one thing, the hypervisor wars aren't over--they're escalating. While VMware remains king of the hill in terms of functionality and market share, Microsoft's Hyper-V continues to gain momentum, with nearly one-third of survey respondents citing some level of use. Improvements in Windows Server 2012 will keep that growth going. Citrix and Oracle are holding their own, and we're only talking server and desktop virtualization here. Never mind the number of hypervisors from vendors competing in virtualized storage, network, I/O, and applications.

Research: 2013 Virtualization Management Survey

Get the full report on our 2013 Virtualization Management Survey free with registration.


This report includes 34 pages of action-oriented analysis, packed with 28 charts. What you'll find:

Trended adoption levels for 13 hypervisors

Why virtualization must be core to compliance efforts

This isn't all bad for IT. Competition has driven core virtualization technology costs down by about 75% since the debut of server virtualization in the early 2000s. Even storage and application virtualization have started shedding their price premiums. A proliferation of new products and vendors also fosters innovation.

But hypervisor fragmentation has a killer downside: a lack of standardization that makes integrating dissimilar silos--an absolute requirement if we want to get to automated service delivery--nearly impossible. A main goal of automation is self-service, empowering business users to provision the IT assets needed for a given project. That goes beyond just server cycles; if the whole stack isn't working in unison, you don't have efficient resource use, self-healing, improved application availability, better power management, preplanned responses to contingency scenarios--all the stuff that makes automation worth the cost and effort.

People Power

One often overlooked issue is that virtualization technology has changed so quickly that only the largest and most progressive companies have the skill sets to maintain it. Respondents to our survey cite a moderate to high degree of difficulty in training or sourcing the professionals necessary to solidify the stack. The traditional IT team structure is changing, too, as we deal with networks inside servers, applications rolled out from virtualization management platforms, and server teams that provision their own storage.

The status quo can even be downright obstructionist if teams fight over who will control which resources and own new responsibilities.

Organizational difficulties aside, we don't yet have the management tools to bring next-generation virtualized networking, I/O, application, and storage technologies under one, or even two or three, panes of glass. Unified management of all these virtualized resources is a bridge our vendors must cross: A large, distributed enterprise infrastructure might mix three types of virtualized storage arrays, two different server hypervisors, a virtual desktop connection broker, an application virtualization controller, an I/O virtualization controller, and an SDN appliance. That's potentially nine management interfaces, not counting Windows- and Linux-specific agents. Without understanding at each and every layer how an application is delivered, it's almost impossible to quickly diagnose problems and maintain quality of service.
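To make the nine-console problem concrete, here is a minimal Python sketch of what a unified view has to do: put every per-layer management interface behind one common contract and report problems by layer. The `ManagementEndpoint` contract, the `Endpoint` stand-in, and the endpoint names are hypothetical; no vendor exposes this API, and in practice each adapter would have to wrap a different proprietary interface, which is exactly the integration work described above.

```python
from dataclasses import dataclass
from typing import Protocol


class ManagementEndpoint(Protocol):
    """Hypothetical contract every per-layer management interface would satisfy."""

    name: str
    layer: str

    def healthy(self) -> bool: ...


@dataclass
class Endpoint:
    """Stand-in for one vendor console; a real adapter would call that vendor's API."""

    name: str
    layer: str
    up: bool = True

    def healthy(self) -> bool:
        return self.up


def inventory() -> list[Endpoint]:
    """The example infrastructure from the text: 3 + 2 + 1 + 1 + 1 + 1 = 9 interfaces."""
    eps = [Endpoint(f"storage-array-{i}", "storage") for i in range(1, 4)]
    eps += [Endpoint(f"server-hypervisor-{i}", "server") for i in range(1, 3)]
    eps += [
        Endpoint("vdi-connection-broker", "desktop"),
        Endpoint("app-virtualization-controller", "application"),
        Endpoint("io-virtualization-controller", "io"),
        Endpoint("sdn-appliance", "network"),
    ]
    return eps


def single_pane(endpoints: list[ManagementEndpoint]) -> dict[str, list[str]]:
    """Group unhealthy endpoints by layer so one view shows where delivery breaks."""
    problems: dict[str, list[str]] = {}
    for ep in endpoints:
        if not ep.healthy():
            problems.setdefault(ep.layer, []).append(ep.name)
    return problems
```

The inventory mirrors the example infrastructure: three storage arrays, two server hypervisors, and four single controllers add up to nine interfaces, before any OS-specific agents.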

Unfortunately, the present market is so diverse and so nonstandard that managing such a large infrastructure leaves little time to understand how applications on virtualized infrastructures are being delivered. This is an important point because IT teams are increasingly driven by the concept of business services, where compute, network, security, and storage are packaged and delivered on demand. When we analyze the state of virtualization, what we're really talking about is commoditization of an application or service, without care for the underlying infrastructure--freedom to distribute each and every service exactly where and when it's needed, with no wasted resources. This idea of total divorce from the physical at every level of the data center is what's giving rise to the latest virtualization paradigm of policy-driven, cloud-based automated service delivery. CIOs need to take this vision seriously if they plan to help business units implement new initiatives fast and match the "instant-on" computing resources offered by public cloud providers like Amazon and Rackspace.

Speaking of cloud, vendors including Cisco, Citrix, Microsoft, and VMware are trying to help in-house IT compete with their new flagship private cloud offerings. The vision: Put a bunch of stuff in a room and let that stuff run your data structures, desktops, and application stack. Just tell it what you want (read: policies) and turn it loose. The infrastructure automatically operates at 100% efficiency and is always up. When it gets overcrowded with requests, it tells you that it needs more hardware, at which point you throw in another server or whatever, and the gear is dynamically absorbed and deployed where needed to deliver on performance guarantees.
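Stripped of the marketing, the policy-driven vision reduces to a simple loop: place each request wherever policy allows, and when nothing fits, report that the pool needs more hardware. The Python sketch below assumes a single abstract capacity unit per host and a single policy knob (`max_utilization`); both are illustrative assumptions, not any vendor's private cloud model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Host:
    name: str
    capacity: int  # abstract capacity units (assumption)
    allocated: int = 0


@dataclass
class Pool:
    """Toy policy-driven pool: place workloads, or signal that it needs more hardware."""

    max_utilization: float  # policy: never fill a host past this fraction
    hosts: list[Host] = field(default_factory=list)
    placements: dict[str, str] = field(default_factory=dict)

    def place(self, workload: str, demand: int) -> Optional[str]:
        """First-fit placement that respects the utilization ceiling."""
        for host in self.hosts:
            if host.allocated + demand <= host.capacity * self.max_utilization:
                host.allocated += demand
                self.placements[workload] = host.name
                return host.name
        return None  # nothing fits: the pool is telling you it needs more hardware

    def add_host(self, host: Host) -> None:
        """New gear is absorbed into the pool and used by later placements."""
        self.hosts.append(host)
```

With `max_utilization=0.8`, a 10-unit host accepts a 6-unit workload but refuses another 4 units; once a second host is added, the same request lands there.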

This scenario is a long, long way from current private cloud reality, where elasticity, scalability, and organization of services are a direct result of IT elbow grease, not automation. But it's where we need to be aiming. In fact, policy-based service delivery has been the end goal of most big hypervisor vendors since day one. But can they pull it off?

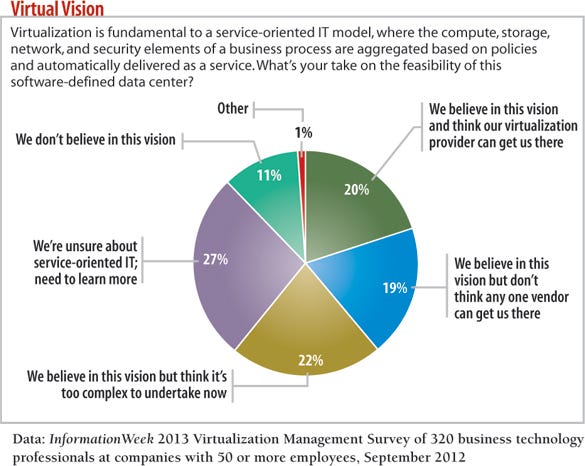

Respondents are skeptical--11% flat out say they don't believe in this vision, and 27% confess ignorance. Of those respondents who have a positive opinion, only one-fifth think that a single virtualization provider can get them there. That's not exactly a vote of confidence in products, such as VMware's vCloud, that purport to deliver service-oriented IT in a single suite. Fully 41% of respondents say it's either too complex to attempt at present or that it will require multiple vendors--an opinion that we're inclined to agree with given the fragmented market.

As to why IT's driving forward anyway, operational flexibility and agility ranked as the top driver for server virtualization and physical-to-virtual conversion among survey respondents in our 2011 and 2013 surveys.

Never let it be said our respondents aren't an optimistic bunch.

Automation Conundrum

Building a highly available infrastructure that responds in real time to changing conditions requires a few things. First, each level of the stack needs to communicate with the other levels. Second, you need policies to respond to scenarios and contingencies that may affect service delivery. Say a superstorm knocks out your East Coast offices, to use a painfully real-world example. Can your employees in Chicago still get to critical business data and applications?
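A preplanned response to the superstorm scenario can be expressed as a policy table that's consulted when a site fails. This is a hypothetical Python sketch: the site names, the `critical` flag, and the rule that only critical services fail over are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical contingency policy: when a site fails, critical services move to the
# named standby site. Noncritical services wait for recovery.
FAILOVER_POLICY = {"east-coast": "chicago"}


@dataclass(frozen=True)
class Service:
    name: str
    site: str
    critical: bool


def respond_to_outage(
    services: list[Service], failed_site: str, policy: dict[str, str] = FAILOVER_POLICY
) -> dict[str, Optional[str]]:
    """Return where each service should run after applying the failover policy."""
    standby = policy.get(failed_site)
    plan: dict[str, Optional[str]] = {}
    for svc in services:
        if svc.site != failed_site:
            plan[svc.name] = svc.site  # unaffected by the outage
        elif svc.critical and standby is not None:
            plan[svc.name] = standby  # the preplanned response
        else:
            plan[svc.name] = None  # no policy covers it: down until recovery
    return plan
```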

But most important, policy-based service-delivery systems depend on automation. Without automated application delivery workflows and orchestration techniques, IT can't hope to meet service-delivery goals.
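Orchestration across the stack typically means running provisioning steps in order and backing out cleanly when one fails, so a half-built service doesn't linger. The Python sketch below shows that pattern (often called a saga, or compensating transactions); the step names are hypothetical, and each `do`/`undo` pair stands in for a call to some layer's management interface.

```python
from typing import Callable

# (name, do, undo): each callable stands in for one layer's management call.
Step = tuple[str, Callable[[], None], Callable[[], None]]


def run_workflow(steps: list[Step], log: list[str]) -> bool:
    """Run steps in order; if one fails, undo the completed ones in reverse order."""
    done: list[Step] = []
    for step in steps:
        name, do, _undo = step
        try:
            do()
        except Exception as exc:
            log.append(f"failed: {name} ({exc})")
            for done_name, _do, done_undo in reversed(done):
                done_undo()
                log.append(f"rolled back: {done_name}")
            return False
        done.append(step)
        log.append(f"done: {name}")
    return True
```

If the VM step fails after storage and network are configured, the log shows network and then storage being rolled back, leaving nothing half-provisioned.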

To that end, we saw healthier-than-expected levels of automation processes interacting with the 15 IT systems we asked about in our survey. At least 80% of respondents cite some level of automation for server virtualization, application virtualization, application deployment, application monitoring, backup and recovery, and network management. Analytics and data warehouses (74%) and directory services (76%) fell just shy.

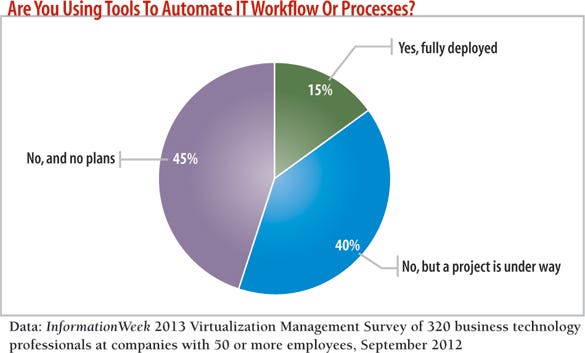

That's encouraging, but we know from experience that it's difficult to demonstrate a strong ROI for automation projects, especially those that don't involve mission-critical applications, which helps explain why only 15% of respondents to our survey have fully deployed automation suites vs. 45% with no plans. Without a common language that lets these systems talk to one another, the manpower needed to automate service delivery is simply too expensive for the current market to bear for anything except the most mission-critical applications.

Another reason for weak adoption is the state of today's data center automation systems, even mature ones like those from BMC, CA, and IBM/Tivoli. Vendors essentially say: "This product can, with a huge investment of time, energy, and money, automate your systems to a certain extent." The value proposition isn't compelling enough unless the service in question is so critical that downtime would mean a massive monetary loss.

The biggest barrier to adopting automation systems, cited by 81% of survey respondents, is a lack of perceived benefit, whether to IT (38%) or to decision-makers (43%). Others cite inadequate skill sets (30%), the expense (21%), and integration difficulties (14%).

We think the big reason IT teams are hesitant to jump into service-oriented IT boils down to one fact: There are so many moving pieces at so many different levels of the stack that the promise of integrating them with one another and with a service-delivery engine seems like an impossible dream. As IT veterans will tell you, the devil really is in the details. What do you mean these modules can't talk with one another? What do you mean we need a specialized programming team to make that happen? The more moving pieces involved, the greater the likelihood of running into a problem that just can't be solved, at least not at any reasonable cost. Multiply this challenge by the number of systems involved in delivering IT in a service-oriented way, and it's no wonder decision-makers are reluctant.

When IT teams are rolling out automation and orchestration systems, they're doing it where the dollars are: business continuity and disaster recovery, automated performance tiering, and dynamic performance management for enterprise applications. All of these categories have a clear ROI: business continuity and disaster recovery for reasons of business survival and regulatory compliance. Automated performance tiering because there's a clear capital expense savings associated with using equipment better. Orchestration because it saves on manpower. And dynamic application monitoring because of the risk of losing sales or suffering bad PR if customer-facing services go south.
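Automated performance tiering's capital savings come from keeping a small, expensive fast tier busy with only the hottest data. This toy Python version assumes each extent fills one fast-tier slot and that heat is a simple access count per sampling window; real arrays use their own, more elaborate heuristics.

```python
def plan_tiers(access_counts: dict[str, int], fast_slots: int) -> dict[str, str]:
    """Toy auto-tiering: the hottest extents go to SSD, everything else to HDD.

    Assumptions: each extent fills one fast-tier slot, and "heat" is a plain access
    count over the last sampling window. Ties are broken by extent name.
    """
    ranked = sorted(access_counts, key=lambda e: (-access_counts[e], e))
    hot = set(ranked[:fast_slots])
    return {extent: ("ssd" if extent in hot else "hdd") for extent in access_counts}
```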

The Road Forward

As you move toward a fully virtualized stack, don't be too quick to discard established IT organizational structures. Rigid delineation into service delivery teams with specific responsibilities came into being for a reason. One big driver: compliance. Delegation-of-authority initiatives demand accountability, and that demands data structures. Two-thirds of our respondents say they are subject to some form of regulation, and many also say server, storage, desktop, and application virtualization are important to maintaining that compliance.

To ensure role flux doesn't adversely impact security, use virtualization-specific management tools to regulate, monitor, and log infrastructure changes. Embrace the role-based access control capabilities native to modern virtualization platforms, and use configuration profiles and policy management tools to confirm that controls extend throughout the stack.
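Role-based access control plus a change log can be sketched in a few lines: a change goes through only if the requester's role grants the permission, and every attempt, allowed or not, is recorded. The role names and permission strings below are hypothetical; modern virtualization platforms ship their own role models, and this Python sketch only illustrates the pattern.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role definitions; real platforms ship their own role models.
ROLES = {
    "storage-admin": {"storage:modify", "storage:read"},
    "server-admin": {"vm:modify", "vm:read", "storage:read"},
    "auditor": {"storage:read", "vm:read"},
}


@dataclass
class ChangeControl:
    user_roles: dict[str, str]
    audit_log: list[dict] = field(default_factory=list)

    def request(self, user: str, permission: str, target: str) -> bool:
        """Allow the change only if the user's role grants it; log every attempt."""
        role = self.user_roles.get(user)
        allowed = role is not None and permission in ROLES.get(role, set())
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "permission": permission,
            "target": target,
            "allowed": allowed,
        })
        return allowed
```

Logging denied attempts alongside approved ones is the design choice that matters for compliance: the log shows not just what changed, but who tried to change what.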

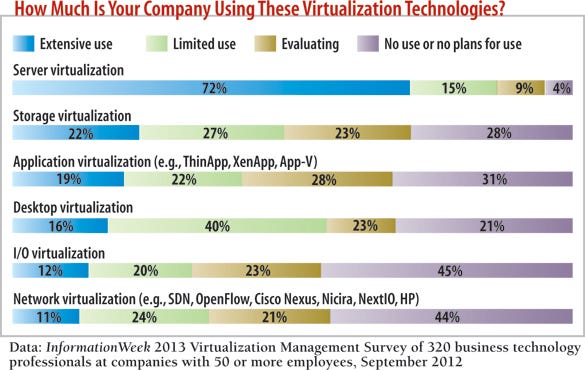

In addition, select supplemental I/O, application, and network hypervisors judiciously. While these technologies aren't being virtualized as fast as core resources such as storage and server, anywhere from one-fifth to nearly one-third of respondents are actively evaluating them. If we aggregate the "evaluating," "limited use," and "extensive use" categories, more than half of respondent companies are adopting the entire beautiful, fragmented virtualization stack, extending from storage and network right on up through servers, desktops, and applications.

Yes, the "extensive use" category rankings are somewhat low. But for that, vendors have only themselves to blame. Data centers are suffering from the kinds of problems that these technologies could solve, if only IT felt comfortable that they could integrate everything.

This statistic brings into sharp relief a problem we've already discussed but that bears repeating--you must make all these moving pieces work together in a way that is meaningful to service delivery. You can't depend on vendors here; the industry is handicapped by a lack of common hypervisor communication standards.

While VMware has continued to revise and update its proprietary communications APIs, the adoption rate by other vendors is pitiful. When looking at storage-assisted virtualization operations, for example, only a few storage arrays are fully API compliant; we're looking at you, FalconStor and Hewlett-Packard.

Our survey also showed a stall in storage virtualization use. Part of this is, we think, due to virtualization features being absorbed into standard array feature sets. Things such as deduplication, automated performance tiering, and dynamic I/O allocations are no longer found only in expensive virtualized arrays; commodity storage vendors are now offering such features on a variety of inexpensive equipment.

Finally, while Oracle and VMware have attempted to supplement I/O and network virtualization capabilities with their acquisitions of Xsigo and Nicira, respectively, the only external network equipment with direct hypervisor integration that we're aware of remains the Cisco Nexus series, which is nearly four years old.

In short, don't expect integration without extraordinary elbow grease.

We admit to being floored by the number of respondents using network virtualization--11% say they're using it extensively and 24% on a limited basis. We're guessing the "limited use" category is data center-only deployments, but an aggregate 34% adoption rate of what is essentially an early-stage technology speaks extremely well for the future of SDN.

It's clear why respondents see value in network virtualization: Configuring services across disparate devices is extremely difficult without vendor-specific network management products. Network virtualization, therefore, figures strongly in compliance initiatives and can pay for itself through faster incident resolution, quicker new deployments, and lower management costs.

Network virtualization also has a huge leg up on other virtualization technologies for one reason: OpenFlow. By embracing a standard network virtualization protocol stack, vendors have made it substantially easier for diverse devices to interoperate. Other virtualization vendors could take a lesson.
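OpenFlow's central abstraction is a flow table of match/action entries that a controller programs on each switch; because every compliant device exposes the same abstraction, one controller can manage gear from different vendors. The Python model below is a simplification, not the OpenFlow wire protocol: match fields are loosely named after OpenFlow's, omitted fields act as wildcards, and a packet that matches nothing is dropped, mirroring the case where no table-miss entry is installed.

```python
from dataclasses import dataclass


@dataclass
class FlowEntry:
    priority: int
    match: dict[str, object]  # fields omitted here act as wildcards
    actions: tuple[str, ...]  # e.g. ("output:2",)


def lookup(table: list[FlowEntry], packet: dict[str, object]) -> tuple[str, ...]:
    """Return the actions of the highest-priority entry whose fields all match."""
    hits = [e for e in table if all(packet.get(k) == v for k, v in e.match.items())]
    if not hits:
        return ("drop",)  # no entry, not even a table-miss entry: discard
    return max(hits, key=lambda e: e.priority).actions


# Example table a controller might install (values are illustrative).
TABLE = [
    FlowEntry(0, {}, ("controller",)),  # table-miss entry: ask the controller
    FlowEntry(10, {"eth_type": 0x0800, "ipv4_dst": "10.0.0.5"}, ("output:2",)),
    FlowEntry(20, {"eth_type": 0x0800, "ipv4_dst": "10.0.0.5", "tcp_dst": 22}, ("drop",)),
]
```

A packet to 10.0.0.5 on TCP port 80 is forwarded out port 2, SSH to the same host is dropped by the higher-priority rule, and unknown destinations fall through to the controller.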


InformationWeek: Nov. 12, 2012 Issue

