How To Build A Modern Data Center

Borrowing ideas from Google, Facebook, and Microsoft, Vantage is building three wholesale data centers in Santa Clara that focus on energy efficiency.

A new type of data center is going up in Santa Clara, Calif., built by Vantage, a specialist in creating modern computing space. The facility's design and energy use are only one step behind those of the leaders in the field, Google and Facebook.

Unlike Vantage, however, Google and Facebook tend to build their data centers in remote, optimum locations like the Columbia River Valley, where power is cheap, or in central Oregon where the cool nights and high desert climate aid in the fight to keep densely packed computer equipment from overheating.

Facebook built its most recent data center in Prineville, Ore., east of the Cascade Mountains and next to a river. That means it has plenty of water for cooling, with no need for electricity-powered refrigeration units, called chillers. Water evaporates so readily as the ambient air passes through a sprayed mist or over water seeping across a membrane that there's no need to install chillers even for the hottest days of summer. Evaporation cools the air by about 15 degrees. After the air absorbs data center heat, it is exhausted from the building.
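The cooling effect described above is commonly estimated with the standard relation for a direct evaporative cooler: supply air approaches, but never reaches, the wet-bulb temperature of the incoming air, and the cooler's saturation efficiency sets how close it gets. The sketch below applies that relation; the 85°F dry-bulb, 65°F wet-bulb, and 75% efficiency figures are hypothetical values chosen for illustration, not measurements from Prineville.

```python
def evaporative_supply_temp_f(dry_bulb_f: float, wet_bulb_f: float, efficiency: float) -> float:
    """Supply-air temperature from a direct evaporative cooler.

    Uses T_supply = T_db - efficiency * (T_db - T_wb); the wet-bulb
    temperature is the floor that evaporation alone can approach.
    """
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    if wet_bulb_f > dry_bulb_f:
        raise ValueError("wet-bulb temperature cannot exceed dry-bulb temperature")
    return dry_bulb_f - efficiency * (dry_bulb_f - wet_bulb_f)


# Hypothetical summer afternoon in a dry climate (illustrative numbers only).
outside_f = 85.0
supply_f = evaporative_supply_temp_f(outside_f, wet_bulb_f=65.0, efficiency=0.75)
print(f"supply air {supply_f:.1f} F, a drop of {outside_f - supply_f:.1f} F")
# supply air 70.0 F, a drop of 15.0 F
```

The wider the gap between dry-bulb and wet-bulb temperatures, the bigger the drop, which is why dry air suits this technique and humid air does not.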

On April 19, BendBroadband likewise announced it had just opened a 30,000-square-foot data center, the Vault, in Bend, Ore., taking advantage of the same cooling principles. It also put solar panels on the roof to generate electricity from the high desert sunshine.

Unlike Facebook and BendBroadband, Vantage is building three "wholesale" data centers on an 18-acre campus in Santa Clara, a city in the heart of Silicon Valley. Vantage faces summer days that are too hot to rely only on outside airflow. It will use water-cooled air, as Facebook does, as much as it can, but it can't rely on that technique alone.

In addition, Vantage's data center, a short distance off Highway 101, wasn't built to support an Internet application, as Facebook's was. It's designed to run enterprise transaction systems and other mission-critical applications. Vantage must provide service-level agreements (SLAs) that leave customers no margin for temporary slowdowns caused by a heat wave, so it has installed chillers for use in summer heat. With the chillers off, the facility has a low Power Usage Effectiveness (PUE) rating of 1.20, matching what Google says is its state of the art. With the chillers running, its PUE rises to 1.29. PUE is the ratio of the total power delivered to the site to the power actually used by computing, telecommunications, and other primary equipment; a perfect score would be 1.0.
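PUE is simple to compute: divide total facility power by the power drawn by IT equipment. The sketch below assumes a hypothetical 10,000 kW IT load (Vantage's actual load was not disclosed) and picks facility totals that reproduce the 1.20 and 1.29 figures quoted above. It also reports what share of incoming power goes to overhead such as cooling and power conversion.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment load must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_share(pue_value: float) -> float:
    """Fraction of incoming power not reaching IT gear (cooling, conversion losses, lighting)."""
    return 1.0 - 1.0 / pue_value


IT_LOAD_KW = 10_000  # hypothetical IT load, for illustration only

for label, facility_kw in [("chillers off", 12_000), ("chillers on", 12_900)]:
    p = pue(facility_kw, IT_LOAD_KW)
    print(f"{label}: PUE {p:.2f}, overhead {overhead_share(p):.1%}")
# chillers off: PUE 1.20, overhead 16.7%
# chillers on: PUE 1.29, overhead 22.5%
```

By the same arithmetic, a PUE of 1.07 means roughly 6.5% of incoming power goes to overhead.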

Facebook recently claimed the lowest PUE for a data center, 1.07, at an event on April 7 at its Palo Alto headquarters.

Jim Trout, the CEO of Vantage, is an enthusiast of data center design. In an interview, he described how a modern data center can cut total energy consumption by one third compared with older data centers. (Facebook claimed Prineville represented a 38% savings over its previous data center construction.) Trout is considered a rising star in the field, having previously been president of the wholesale data center company CRG West, which has since become CoreSite, a Vantage competitor across town. He has also been senior VP of technical operations at Digital Realty Trust, another wholesale data center space supplier in Santa Clara and elsewhere.

Silver Lake Partners financed Vantage with an undisclosed sum, partly to give Trout a chance to build more state-of-the-art data centers. Once its second and third phases are done in about three years, Vantage's Walsh Avenue campus will represent a $300 million investment. Vantage held an event on April 22, Earth Day, to describe its latest design initiatives. David Gottfried, founder of the U.S. Green Building Council, was the lead-off speaker.

Trout said the biggest savings come from how a data center brings power off the grid and into its building, with another set of savings coming from the design of airflow through racks of servers. He said he thinks Vantage has matched Google and Facebook in its ability to distribute power to the data center sections, but he also said Facebook excelled in its implementation of airflow through its server racks.

"They took the servers out of the boxes, out of the sleeve, disentangling them and allowing greater ease of air movement through a 42-unit rack," he noted, calling it a major innovation.

He doesn't have that option. "We are not touching the servers and routers," he said. In a wholesale data center, Vantage provides plug-and-play space with power and cooling. The customer installs the equipment--servers, routers, and switches.

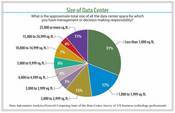

Analytics Slideshow: 2010 Data Center Operational Trends Report

A site's power distribution features are not something that just any company can go back and revise to save energy; they have to be built into the original design. Vantage's Walsh Avenue campus is supplied with electricity through an on-site, 50-megawatt substation with two transformers. The substation sits on a "self-healing" transmission ring shared with other substations. If the Vantage substation malfunctioned, the other substations on the ring would still ensure that the site got the power it needed, Trout said.

Most enterprise data centers lack a 50-megawatt substation nearby. One Internet entrepreneur related how his servers went down at his co-location facility because the site was strapped for power and couldn't get more. All seemed fine until one day a co-lo employee went to the nearby lunchroom, pushed down the toaster button, and the data center went dark.

Trout said that's why most data centers never tap more than 80% of the power being made available to them. They want a buffer, a margin of error, in case of an unanticipated toaster event. At the Vantage site, however, the "robust design" calls for the substation to deliver the power needed without a buffer. Trout said the data center is "using 1.0 of the electricity it brings in the building, instead of .8."
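The 80% rule of thumb amounts to a simple headroom check. The sketch below assumes a hypothetical 10,000 kW of provisioned power and shows how much capacity an 80% ceiling holds back compared with drawing the full 1.0. The function names and figures are illustrative assumptions, not drawn from any Vantage system.

```python
def usable_kw(provisioned_kw: float, max_utilization: float) -> float:
    """Power an operator is willing to draw, given a utilization ceiling in (0, 1]."""
    if not 0.0 < max_utilization <= 1.0:
        raise ValueError("max_utilization must be in (0, 1]")
    return provisioned_kw * max_utilization


def can_add_load(current_kw: float, extra_kw: float,
                 provisioned_kw: float, max_utilization: float) -> bool:
    """True if an extra load keeps total draw within the chosen ceiling."""
    return current_kw + extra_kw <= usable_kw(provisioned_kw, max_utilization)


PROVISIONED_KW = 10_000  # hypothetical provisioned power

for ceiling in (0.8, 1.0):
    usable = usable_kw(PROVISIONED_KW, ceiling)
    print(f"ceiling {ceiling:.0%}: usable {usable:,.0f} kW, "
          f"held in reserve {PROVISIONED_KW - usable:,.0f} kW")
```

A site that has already consumed its headroom has nowhere to put even a toaster (assumed here to draw about 1.5 kW): `can_add_load(9_999, 1.5, 10_000, 1.0)` returns `False`.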

In addition, the power is stepped down at the substation from transmission-line voltage to 480 volts as it's carried to different sections of the data center, instead of the more typical 220 or 110 volts. Facebook did the same thing, Trout said, delivering 480 volts close to the servers because the technique reduces power lost in transmission. Delivering power always consumes a percentage of it because of resistance in the line. For a given amount of power, however, a higher voltage means a lower current, and resistive loss grows with the square of the current, so the higher the voltage, the lower the loss. Voltage isn't literally a measure of an amount of electricity so much as an indication of the pressure behind it.
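The voltage argument is Ohm's-law arithmetic: for a fixed power P, current is I = P / V, and resistive line loss is I²R, so loss falls with the square of the voltage. The sketch below treats the feed as a simple single-phase circuit with a hypothetical 100 kW load and 0.01 ohm of conductor resistance, ignoring power factor and three-phase details, purely to compare the voltages mentioned above.

```python
def line_loss_w(power_w: float, volts: float, resistance_ohm: float) -> float:
    """Resistive loss in a conductor delivering power_w at the given voltage."""
    current_a = power_w / volts              # I = P / V
    return current_a ** 2 * resistance_ohm   # P_loss = I^2 * R


LOAD_W = 100_000       # hypothetical 100 kW load
RESISTANCE_OHM = 0.01  # hypothetical conductor resistance

for volts in (480, 220, 110):
    loss = line_loss_w(LOAD_W, volts, RESISTANCE_OHM)
    print(f"{volts} V: {loss:,.0f} W lost ({loss / LOAD_W:.2%})")
# 480 V: 434 W lost (0.43%)
# 220 V: 2,066 W lost (2.07%)
# 110 V: 8,264 W lost (8.26%)
```

Doubling the voltage cuts the loss to a quarter, which is why carrying 480 volts close to the servers pays off.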

Once the power is close to the server racks, it's stepped down to 12 volts and passes through a Vantage innovation, an insulated gate bipolar transistor (IGBT) unit. It's unusual to use an IGBT at this stage. The device is a specialized semiconductor that can allow alternating current to pass through it, and if the AC power disappears, it allows direct current supplied by batteries to instantly replace the loss.

The IGBT device is the key component of the Vantage data center's uninterruptible power supply (UPS). It both passes normal alternating current (AC) and can convert direct current (DC) from the batteries into instant replacement AC. In a power outage, it has to perform this function for only 5 to 8 minutes, the time the batteries can sustain the data center load, but that's enough to get the backup generators started and running, Trout said.
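The ride-through sequence can be sketched as a source-selection rule: utility AC when it is present, battery power converted to AC during an outage, and the generators once they are ready. The 300-second battery runtime matches the low end of the 5-to-8-minute window quoted above; the 60-second generator start time and the `Source` and `select_source` names are hypothetical, and the sketch does not model how an IGBT actually switches.

```python
from enum import Enum


class Source(Enum):
    UTILITY = "utility AC"
    BATTERY = "battery DC inverted to AC"
    GENERATOR = "backup generator"
    NONE = "load dropped"


def select_source(utility_ok: bool, seconds_into_outage: float,
                  battery_runtime_s: float, generator_ready_s: float) -> Source:
    """Pick what feeds the load at a given moment of an outage."""
    if utility_ok:
        return Source.UTILITY
    if seconds_into_outage >= generator_ready_s:
        return Source.GENERATOR   # generators have taken over
    if seconds_into_outage < battery_runtime_s:
        return Source.BATTERY     # batteries bridge the gap
    return Source.NONE            # batteries ran out before generators came up


BATTERY_RUNTIME_S = 5 * 60  # low end of the 5-8 minute window
GENERATOR_READY_S = 60      # hypothetical start-and-stabilize time

for t in (0, 30, 90):
    print(t, select_source(False, t, BATTERY_RUNTIME_S, GENERATOR_READY_S).value)
# 0 battery DC inverted to AC
# 30 battery DC inverted to AC
# 90 backup generator
```

The scheme only works if the generators are ready before the batteries are exhausted: with a hypothetical 10-minute generator start, `select_source(False, 360, 300, 600)` returns `Source.NONE`.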

Neither Facebook nor Google uses the IGBT approach, to the best of his knowledge. Every data center uses some form of uninterruptible power supply, which typically delivers 88% to 90% of the electricity coming into the data center through it; the UPS must sip power from the normal supply to ensure that its batteries stay fully charged. The Vantage IGBT approach delivers 96% of the power coming through the UPS, he said.
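The efficiency gap is easier to appreciate in absolute terms. The sketch below assumes a hypothetical 1,000 kW load downstream of the UPS, running around the clock, and computes how much power each efficiency figure quoted above burns inside the UPS itself.

```python
HOURS_PER_YEAR = 8_760


def ups_loss_kw(output_kw: float, efficiency: float) -> float:
    """Power lost inside a UPS that delivers output_kw at the given efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return output_kw / efficiency - output_kw


LOAD_KW = 1_000  # hypothetical load fed through the UPS

for eff in (0.88, 0.90, 0.96):
    loss = ups_loss_kw(LOAD_KW, eff)
    print(f"{eff:.0%} efficient: {loss:.1f} kW lost, "
          f"{loss * HOURS_PER_YEAR:,.0f} kWh per year")
```

Under these assumptions, moving from 90% to 96% efficiency cuts the UPS loss from about 111 kW to about 42 kW.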

Although Vantage is trying to use all the power coming onto its premises, its design gives it the ability to draw more power from the substation. Trout called it "2N redundancies all the way to the server," meaning the system can deliver twice the power needed. If one transformer in the substation dies, for example, the surviving transformer can still deliver all the power needed.

Likewise, if smaller transformers in the step-down process die, other transformers kick in to keep the servers running. It's a just-in-time delivery strategy, instead of keeping (and wasting) a power reserve.
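The 2N idea reduces to a single-failure check: remove any one unit and see whether the survivors can still carry the load. The sketch below uses hypothetical transformer ratings and a hypothetical 25 MW load; the article gives only the substation's 50-megawatt figure, not individual ratings or the actual load.

```python
def survives_any_single_failure(capacities_mw: list[float], load_mw: float) -> bool:
    """True if the load is still covered after losing any one unit."""
    total = sum(capacities_mw)
    return all(total - failed >= load_mw for failed in capacities_mw)


LOAD_MW = 25.0  # hypothetical load

two_n = [25.0, 25.0]   # 2N: each transformer can carry the whole load alone
just_n = [12.5, 12.5]  # N: both transformers are needed to carry the load

print(survives_any_single_failure(two_n, LOAD_MW))   # True
print(survives_any_single_failure(just_n, LOAD_MW))  # False
```

The same check applies unchanged to the smaller step-down transformers closer to the racks.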

The power distribution design built into the first wholesale data center on Vantage's campus, completed in mid-February, will be used again in the second phase, now underway, and in a third still to come. Trout said the big-step energy savings are occurring now, with Amazon.com and Microsoft, as well as Google and Facebook, paving the way.

There will be more to come, but the art of data center design has finally gotten around to addressing its major sources of wasted power. "We may move one day from 1.07 to 1.06 or even 1.05," he said. But there will be no more announcements of 38% power savings in a new data center versus the one that came before it, he said. Today is the time for dramatic gains. Tomorrow's gains will come much harder.
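For a fixed IT load, total facility energy scales with PUE, so the payoff from the future improvements Trout mentions can be checked directly. The sketch below compares a PUE of 1.07 with the 1.06 and 1.05 figures from his quote.

```python
def facility_savings(old_pue: float, new_pue: float) -> float:
    """Fractional cut in total facility energy for the same IT load."""
    return 1.0 - new_pue / old_pue


for target in (1.06, 1.05):
    print(f"1.07 -> {target}: {facility_savings(1.07, target):.1%} less energy")
# 1.07 -> 1.06: 0.9% less energy
# 1.07 -> 1.05: 1.9% less energy
```

Gains of one or two percent, set against the 38% Facebook claimed for Prineville, show why Trout expects tomorrow's gains to come much harder.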
