Senate Hearing and Big Tech’s Social Media Responsibility

Heated calls for action on child protection fueled the Senate Judiciary Committee’s hearing with the heads of Discord, Meta, Snap, TikTok, and X.

The protection of children from online dangers and related real-world harms got intense attention in Washington recently, at least for one day. Last week’s Senate Judiciary Committee hearing called for testimony from the CEOs of X (formerly Twitter), Meta, Snap, Discord, and TikTok on what is being done to combat the exploitation of children via these social media platforms.

In an era when organizations are quick to examine visibility into their IT infrastructures for the sake of cybersecurity, accessibility, and chain of custody of assets, is it also time to discuss further awareness of and responsibility for malice enacted through technology?

A declaration on the Senate Judiciary Committee’s website summed up a loss of patience with the businesses that run these platforms: “Social media companies have failed to police themselves at our kids’ expense, and now Congress must act.”

Chairman Dick Durbin (Democrat - Illinois), Ranking Member Lindsey Graham (Republican - South Carolina), and other committee members asked whether the leaders of these social media platforms sacrificed the protection of children from exploitation while focusing on profits.

Federal legislation such as the Children’s Online Privacy Protection Act (COPPA) exists, though that particular law focuses on the collection of personal information from youths by online platforms such as websites and social media.

Opinions are divided on how to further protect children specifically from sexual exploitation online. For example, the STOP CSAM Act, whose name refers to child sexual abuse material, is described as a policy to “combat the sexual exploitation of children by supporting victims and promoting accountability and transparency by the tech industry.” The language of that bill, however, has faced pushback from a host of parties who say they want to protect civil liberties such as free expression and halt further government surveillance.

During last week’s hearing, Sen. Amy Klobuchar (Democrat - Minnesota) blamed the power Big Tech wields for some of the delay in enacting more national legislation to protect children from online sexual exploitation. Other points of concern and outright accusations peppered the committee’s hearing, which also included its share of attempted political grandstanding.

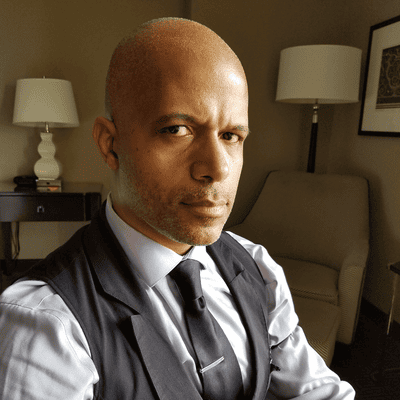

This episode of DOS Won’t Hunt takes a look at what responsibility tech faces when there is malicious use of its resources and services, as well as the execution of safeguards and corrective actions to deal with such issues.
