DevOps and Security Takeaways From Twitter Whistleblower Claims

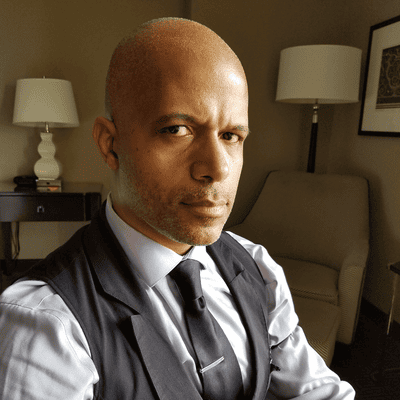

Allegations from whistleblower Peiter ‘Mudge’ Zatko, Twitter’s former head of cybersecurity, raise questions about observability, access rights, and the pressures on developers.

When news broke that Peiter “Mudge” Zatko, the former head of cybersecurity at Twitter, had become a whistleblower, it set off alarm bells that may resonate with other enterprises.

Mudge, as Zatko is known among cybersecurity researchers, has credentials that go back 30 years, including work with hacker think tanks and leading research projects at DARPA. He was let go from Twitter in January and has since alleged that his former employer exercised lax oversight of information and data security and allowed unchecked access to sensitive areas of the company. His accusations include assertions that foreign states, such as Russia and China, could take advantage of the alleged vulnerabilities.

As his disclosures continue to be vetted, other enterprises may want to examine their own processes and controls on permissions and access rights at a time when developers might be pushed to work fast.

An organization such as Twitter probably has guidelines for handling its most critical and personally identifiable data, says Kevin Novak, managing director of cybersecurity with Breakwater Solutions. Such policies might say access is provided on a “need-only” basis, he says, but Zatko’s concerns put Twitter in the spotlight, especially if more people have access to sensitive information than actually need it. “They could influence that information, access that information, change processes about how it is used,” Novak says. “It’s just over-empowering.”
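To make the “need-only” idea concrete, here is a minimal Python sketch of a deny-by-default access check. The `AccessPolicy` class, the user names, and the data categories are hypothetical illustrations, not anything Twitter or Novak described. The point is that access requires an explicit grant and that every decision, whether allowed or denied, is recorded so that access remains observable.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AccessPolicy:
    # Hypothetical grants: each user maps to the data categories they need.
    grants: dict[str, set[str]]
    # Audit trail of (timestamp, user, category, allowed) for every request.
    audit_log: list[tuple[str, str, str, bool]] = field(default_factory=list)

    def can_access(self, user: str, category: str) -> bool:
        # Deny by default: only an explicit grant allows access.
        allowed = category in self.grants.get(user, set())
        # Log denials as well as approvals so access is observable.
        self.audit_log.append(
            (datetime.now(timezone.utc).isoformat(), user, category, allowed)
        )
        return allowed


policy = AccessPolicy(grants={"support-agent": {"account_status"}})
print(policy.can_access("support-agent", "account_status"))  # True
print(policy.can_access("support-agent", "phone_number"))  # False
print(policy.can_access("intern", "account_status"))  # False
print(len(policy.audit_log))  # 3
```

A real deployment would keep grants and logs in a central identity and logging system rather than in memory. The design choice the sketch illustrates is the same either way: absence of a grant means no access, and the log records who asked for what.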

It can be hard for large enterprises to follow through on their own guidelines, he says, because of the time and effort involved and the need to balance the needs of staff against those of management.

Constant Push on Developers

There is pressure on developers, Novak says, to update and deliver products through constant iterative development. “There’s that constant push for developers to have free rein to be innovative,” he says. This can lead to enterprises taking risks and granting developers carte blanche. “It’s really why you need a really robust, secure software development lifecycle set of guidelines and principles,” Novak says.

Governance that allows for free rein within certain guardrails is necessary for companies, he says. It lets developers work in an agile, innovative environment without violating certain principles. While such practices seem simple enough to follow, there can be a temptation to move as fast as possible regardless of the risks. “Companies that don’t put those governance guardrails in place are just trying to get their market share, because they recognize that speed to market has become a critical component of being able to gain market share,” Novak says.

Access control for data and the development process can be a challenge for most companies, says Kenneth White, security principal with MongoDB. “What’s striking here is … just how widespread access is, possibly without logging or visibility for core production systems,” he says. “That’s certainly not the norm and is troubling.”

Strict Change Controls Needed

If organizations do not know whether something happened, White says, they cannot know why engineers touched it. That limits the ability to roll back changes to a production system, which could raise risk. The modern, agile development world surrounding DevOps often lends itself to constant code deployment, he says, with changes made all the time. Even constant updates need to be carefully managed with strict change control, White says. “Knowing exactly what was changed, who changed it, and being able to revert that is a foundation principle of modern development practices.”

It is not unheard of for hyperscalers and larger tech companies to let a large group of engineers deploy changes to production, he says. “What’s critically important is it’s a managed change. It’s reversible; it’s observable; it’s auditable.” Not knowing who made changes and why, not knowing precisely what happened, and having no process for rolling back changes is a recipe for calamity, he says.
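White’s criteria for a managed change that is reversible, observable, and auditable can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `ManagedConfig` is a hypothetical in-memory settings store, not a real tool, and a production system would persist its history and add approvals. Each change must carry an author and a reason, the history is append-only, and a rollback is itself recorded as a change.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any

_MISSING = object()  # marks a key that did not exist before a change


@dataclass(frozen=True)
class Change:
    author: str
    reason: str
    key: str
    old_value: Any
    new_value: Any
    timestamp: str


class ManagedConfig:
    """Hypothetical production settings store where every change is recorded."""

    def __init__(self) -> None:
        self._values: dict[str, Any] = {}
        self.history: list[Change] = []  # append-only audit trail

    def get(self, key: str) -> Any:
        return self._values.get(key)

    def _record_and_set(self, key: str, value: Any, author: str, reason: str) -> None:
        # Refuse anonymous or unexplained changes: who and why must be known.
        if not author or not reason:
            raise ValueError("every change needs an author and a reason")
        old = self._values.get(key, _MISSING)
        self.history.append(
            Change(author, reason, key, old, value, datetime.now(timezone.utc).isoformat())
        )
        if value is _MISSING:
            self._values.pop(key, None)  # undoing the creation of a key
        else:
            self._values[key] = value

    def apply(self, key: str, value: Any, author: str, reason: str) -> None:
        self._record_and_set(key, value, author, reason)

    def revert_last(self, author: str, reason: str) -> None:
        # A rollback is itself a recorded change, so the trail stays complete.
        if not self.history:
            raise RuntimeError("nothing to revert")
        last = self.history[-1]
        self._record_and_set(last.key, last.old_value, author, reason)


cfg = ManagedConfig()
cfg.apply("rate_limit", 100, author="alice", reason="initial limit")
cfg.apply("rate_limit", 500, author="bob", reason="raise limit for launch")
cfg.revert_last(author="alice", reason="error rate spiked after change")
print(cfg.get("rate_limit"))  # 100
print([(c.author, c.new_value) for c in cfg.history])
# [('alice', 100), ('bob', 500), ('alice', 100)]
```

Recording the rollback as its own entry, rather than deleting the bad change from history, keeps the trail complete. An auditor can see both what was tried and who undid it.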

Many organizations give developers a wide berth to make changes or updates to their own systems, White says, and large, engineering-oriented organizations may do that in production. “The context in which those changes are made is critically important,” he says. “It’s not some magic formula.” Leaving things unchecked does not translate into faster innovation or time to market for products and services, White says.

Top-down leadership from the C-level must be engaged in laying out and enforcing oversight and processes to minimize risk, he says. “The mechanics, specific implementations, and tools are often chosen by the folks on the frontline, and that’s completely appropriate. But choosing not to have change control, choosing not to have any kind of mature software practices, skipping the auditing: those aren’t things that are debatable,” White says.
