Connection Draining enables AWS customers to shutter unneeded virtual servers without disrupting users' in-flight requests to apps.

Amazon has added a new feature to one of its cloud services, highlighted in a tweet by AWS CTO Werner Vogels on Thursday: Elastic Load Balancing now supports Connection Draining, he said, calling it "excellent news."

Amazon's Elastic Load Balancing service enables a busy application to spread incoming traffic over more than one instance of the application for better response times. When combined with Amazon's Auto Scaling service, the two act together to dynamically allocate and reallocate resources in Amazon's Elastic Compute Cloud to suit a customer's needs. Just as traffic often increases, it also decreases, necessitating a scaling back of resources.

That's where Connection Draining comes in. It's needed during a workload's scale-back period in the cloud. When traffic diminishes from a peak, instances and network connections must be shut down. Closing network connections risks disrupting application responses that are still in the delivery stage. If they're closed clumsily, users may see page freezes or file download disruptions.

"You'd like to avoid breaking open network connections, while taking an instance out of service, updating its software, or replacing it with a fresh instance that contains updated software," wrote AWS cloud evangelist Jeff Barr in a blog post on Thursday. Breaking them inadvertently means frustrated customers somewhere, he noted.


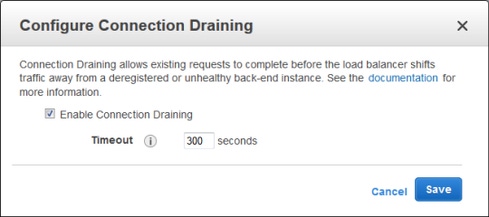

By applying Connection Draining to Elastic Load Balancing, Amazon customers can avoid that disruption. The customer can designate a "timeout" period between the moment an instance is taken out of service and the moment the network connections to it are closed. Building in this lag gives in-flight responses time to finish delivering, even after the instance has been removed from service. The timeout can be anywhere from one second to 60 minutes, if the customer wants a long safeguard period.

As the instance is removed from the load balancer's registry, the load balancer still knows how long it should allow in-flight requests to the workload to complete, and also knows to send no new requests to the discontinued virtual machine. Once the timeout period is exhausted, however, the network connections are closed, and any in-flight requests that remain will not complete.
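The timeout behavior described above can be modeled in a few lines of Python. This is a simplified sketch, not Amazon's implementation: the class names, the `drain` function, and the instance and request IDs are all hypothetical, and the model treats time as a single number rather than tracking real connections.

```python
from dataclasses import dataclass, field


@dataclass
class InFlightRequest:
    request_id: str
    seconds_remaining: float  # time the response still needs to finish


@dataclass
class DrainingInstance:
    instance_id: str
    in_flight: list = field(default_factory=list)
    accepting_new: bool = True


def drain(instance, timeout_seconds):
    """Take `instance` out of service and report the fate of its requests.

    Returns (completed, dropped): request IDs that finish within the
    timeout, and request IDs cut off when the connections are closed.
    """
    # The article gives the allowed range as one second to 60 minutes.
    if not 1 <= timeout_seconds <= 3600:
        raise ValueError("timeout must be between 1 and 3600 seconds")

    # No new requests are routed to an instance that is draining.
    instance.accepting_new = False

    completed = [r.request_id for r in instance.in_flight
                 if r.seconds_remaining <= timeout_seconds]
    dropped = [r.request_id for r in instance.in_flight
               if r.seconds_remaining > timeout_seconds]

    # Once the timeout expires, every remaining connection is closed.
    instance.in_flight.clear()
    return completed, dropped


if __name__ == "__main__":
    web1 = DrainingInstance(
        "i-hypothetical",
        [InFlightRequest("page-load", 2), InFlightRequest("big-download", 400)],
    )
    completed, dropped = drain(web1, timeout_seconds=300)
    print("completed:", completed)
    print("dropped:", dropped)
```

With a 300-second timeout, the short page load finishes while the 400-second download is cut off, which is the trade-off a customer weighs when choosing the length of the timeout.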

Connection Draining can be activated from the AWS Management Console. Customers must be using the latest version of the console. Clicking on the small blue cartoon bubble in the toolbar at the top right corner of the console enables the new console version to load, Barr wrote. A manager can click on a load balancer, modify it by enabling the new Connection Draining capability, and save the change. A customer can also implement it through the AWS command line interface or by calling the ModifyLoadBalancerAttributes function in the load balancer API. The feature can be added to an existing load balancer and will be added by default to new load balancers.
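For readers who prefer the API route, the call might look roughly like the following in Python. This sketch assumes the boto3 SDK, which the article does not mention; the load balancer name `my-load-balancer` and both helper functions are hypothetical, and the 300-second default here is just an illustrative value.

```python
def draining_attributes(enabled=True, timeout_seconds=300):
    """Build the attribute payload for ModifyLoadBalancerAttributes."""
    # The article gives the allowed range as one second to 60 minutes.
    if not 1 <= timeout_seconds <= 3600:
        raise ValueError("timeout must be between 1 and 3600 seconds")
    return {
        "ConnectionDraining": {
            "Enabled": enabled,
            "Timeout": timeout_seconds,
        }
    }


def enable_connection_draining(load_balancer_name, timeout_seconds=300,
                               client=None):
    """Turn on Connection Draining for an existing load balancer."""
    if client is None:
        # Imported lazily so the payload helper works without boto3 installed.
        import boto3
        client = boto3.client("elb")
    return client.modify_load_balancer_attributes(
        LoadBalancerName=load_balancer_name,
        LoadBalancerAttributes=draining_attributes(True, timeout_seconds),
    )


if __name__ == "__main__":
    # Requires AWS credentials and a real load balancer with this
    # (hypothetical) name.
    enable_connection_draining("my-load-balancer", timeout_seconds=120)
```

Separating the payload builder from the network call keeps the timeout validation testable without touching an AWS account.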

Connection Draining is the sort of enhancement to a cloud service that allows it to operate in a manner more similar to on-premises applications. System administrators in an enterprise datacenter don't have to worry about physical network connections disappearing, so all in-flight requests are completed. But attempting to manage a cloud workload in a similar manner has its awkward moments, one being when the instance needs to be shut down but the manager can't tell whether all its end-user requests have completed.

For AWS customers with fluctuating traffic acting on their web servers and applications in EC2, Connection Draining is another way to smooth out the differences between workloads running on premises and the same workloads in the cloud.
