Pivotal deal offers five analytical options and, in a swipe at Cloudera and Hortonworks, unlimited Hadoop storage without charges by the terabyte.

Plenty of Hadoop software suppliers are now advocating storing all data in a Hadoop-based data lake (or "enterprise data hub," in Cloudera's lingo). But that's more than a little self-serving, given that they're charging for software support by the node.

"They're taxing you for every terabyte," said Michael Cucchi, Pivotal's senior director of product marketing, in a phone interview with InformationWeek. "We know that won't work, so we're moving our big-data platform to a new licensing model."

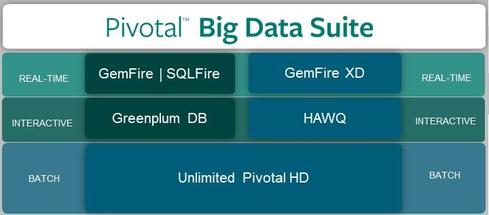

With the Pivotal Big Data Suite subscription, which was announced on Wednesday, you pay by the processor core used by its analytic engines, not by the terabyte of its Hadoop distribution, Pivotal HD. And in contrast to dedicated Hadoop vendors, Pivotal offers an array of analytic engines: the Greenplum massively parallel processing database, the GemFire in-memory object grid, the SQLFire in-memory database, the HAWQ SQL-on-Hadoop engine based on Greenplum, and its just-released GemFire XD in-memory engine. Like HAWQ, GemFire XD runs on top of Hadoop, and it's an alternative to the up-and-coming Spark project.

[Want more on Pivotal's alternative to Spark? Read Pivotal Brings In-Memory Analysis To Hadoop.]

Pivotal reasons that the value of data is in its analysis, not in the container. That's spot on, and it's why Cloudera has recently attracted more venture capital investment than any other Hadoop vendor. Not that the other Hadoop distributors are hurting for investment, but Cloudera has stood out in building out analytical capabilities (like Impala and ties to Spark) on top of Hadoop.

Only IBM can be compared to Pivotal in offering an array of analytic options as well as a Hadoop distribution, Cucchi said. Oracle and Teradata, for example, have to partner with Hadoop vendors. Meanwhile, Hadoop-only vendors don't have databases, and the maturity and mix of technologies and open-source projects they rely on vary widely, to say the least.

"Many companies want to add a data lake, but they feel trapped," Cucchi said. "They don't know which technologies they'll need or which style of analysis they'll end up doing. We're taking the pain points off the table and removing the requirements to make choices."

With Pivotal's all-inclusive subscription, you can use any of its analytic engines, and you can change the mix of core license usage as your needs change. You also get unlimited, all-you-can-eat support for Pivotal HD, so you can store as much as you want and deploy 10 nodes, 100 nodes, or 1,000 nodes without facing additional support costs.

One catch here is that the subscription covers only software and support, so you'll have to pay for whatever compute and storage capacity you need (and, yes, there's a big difference in the hardware costs of 10 nodes versus 1,000 nodes). But customers have flexibility in that the software can be used with on-premises servers and storage, virtualized capacity, or cloud compute and storage nodes on Amazon Web Services or another public cloud.

The other catch is that the Pivotal Big Data Suite subscription requires a minimum investment and a two-year or three-year term (with discounts for the longer deal). The company is not disclosing costs or terms to the media, but Cucchi said it "looks like pure-play, Hadoop-vendor pricing, not that of big-iron vendors." Translation: think Cloudera/Hortonworks pricing, not IBM pricing.

The appeal of Pivotal's new offer will clearly depend, in part, on whether and to what extent would-be customers are already invested in Pivotal or third-party analytic engines. As for the unknown upfront investment and two- or three-year term, that's a commitment to the Pivotal way. If the depth and breadth of analyses and the size of the lake aren't all that impressive after two years, then the advantages of the analytic bundle and unlimited Hadoop support may be elusive.

IT organizations must build credibility as they cut apps, because app sprawl is often due to unmet needs. Also in the App Consolidation issue of InformationWeek: To seize web and mobile opportunities, agile delivery is a given. (Free registration required.)

About the Author(s)

You May Also Like