Nvidia Talks Ways to Bring Generative AI to Financial Services

The Santa Clara, California-based chipmaker discussed how generative AI could bring efficiencies in productivity and power consumption at the AWS Financial Services Cloud Symposium.

As many companies are quickly discovering, letting generative AI run amok with sensitive information is less than ideal. At last week’s AWS Financial Services Cloud Symposium in New York, Nvidia shared insights that might show how financial services organizations can take advantage of the technology.

The compliance concerns of banks, which include safeguarding assets and customer data, could easily raise red flags about unchecked use of generative AI. At the same time, the hype now surrounding AI is impossible to ignore, even for organizations in heavily regulated sectors.

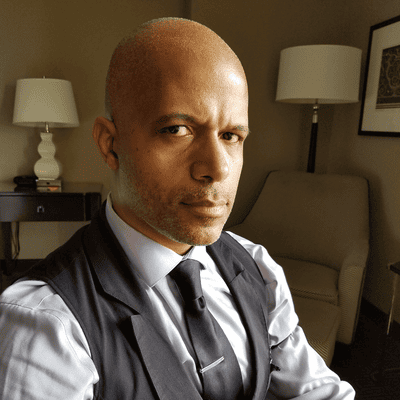

“We are living in interesting times,” said Malcolm deMayo, Nvidia’s global vice president for financial services, during his presentation at the symposium. He called the recent rise of AI the beginning of a series of inflection points, including the evolution of machine learning model architecture toward the transformer model, which Nvidia describes as a neural network that can learn context, and with it meaning.

Rise of ChatGPT Lifts All AI Boats

DeMayo spoke about how generative AI has made the AI sector as a whole a bigger part of public discourse. He said generative AI can pass, or is close to passing, the Turing test, which gauges whether a machine’s responses can be told apart from a human’s. Naturally, he also pointed out that ChatGPT was trained on more than 10,000 Nvidia GPUs in a cloud-based supercomputer built by Microsoft.

Part of the phenomenon now surrounding generative AI is its ease of use, deMayo said. “The only skill you need to use generative AI is to be able to ask a question and it doesn’t even have to be a good question,” he said. “Through conversation, the model will figure out what your intentions are.” Generative AI has also benefited from earlier waves of technology that brought ubiquitous, handheld mobile computing via the cloud, deMayo said. “Everyone has access to generative AI, virtually everyone on the planet.”

It should be noted that Nvidia is a significant stakeholder in the AI sector, having invested heavily in it over the past decade.

Generative AI Gets Green

There might be some sustainability benefits that come with the growth of AI and newer chipsets. On the hardware side, deMayo said, Nvidia’s development of DPUs (data processing units) in tandem with more efficient CPUs is improving speed and performance while reducing power consumption. That could help address ecological concerns, as the escalating scale of compute raises questions about energy usage.

For instance, deMayo said that while traditional CPUs might see a 0.1x increase in processing power each year, GPUs and software together are accelerating processing power by more than 4x each year, a pace he said shatters Moore’s Law.
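To put those growth rates side by side, the compounding below takes deMayo’s figures at face value under two stated assumptions: that a “0.1x increase” means 10% annual growth (a yearly multiplier of 1.1) and that “4x” means a fourfold yearly multiplier. Moore’s Law is included using its usual statement, a doubling roughly every two years, although strictly it describes transistor counts rather than processing power. This is an illustrative calculation, not a figure from the presentation.

```latex
% Assumptions: CPU multiplier 1.1 per year, GPU-plus-software multiplier 4 per year,
% Moore's Law as a doubling every two years; n = number of years.
P_{\text{CPU}}(n) = 1.1^{n}, \qquad
P_{\text{Moore}}(n) = 2^{n/2}, \qquad
P_{\text{GPU}}(n) = 4^{n}

% Over n = 5 years:
P_{\text{CPU}}(5) = 1.1^{5} \approx 1.61, \qquad
P_{\text{Moore}}(5) = 2^{2.5} \approx 5.66, \qquad
P_{\text{GPU}}(5) = 4^{5} = 1024
```

Because the gap compounds, even a modest difference in the yearly multiplier produces orders-of-magnitude differences within a few years, which is the sense in which such a pace would outstrip Moore’s Law.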

Translating that ramp-up in processing power into energy terms, he said data centers currently consume 2% of the world’s energy. “Unchecked, that’s going to expand to greater than 5% shortly,” deMayo said. Power consumption can already be an issue as demand rises. “You know the horror stories,” he said. “You go into data centers today and you can’t get power to a rack, or you can’t fill a rack because you can’t get enough power to it. So, what happens is we have this massive server sprawl.”

The use of more advanced resources, including GPUs and AI, could help address energy consumption issues by driving greater efficiency and productivity, possibly by a factor of 10, without adding headcount, deMayo said. “This conversation is taking place in every C-suite,” he said.

Generative AI could increase productivity in areas such as creating reports, working with data in novel ways, and providing customer service, deMayo said. “It’s really expensive to bring on agents, to bring on bankers and to train them on hundreds of products,” he said, “and to train them to deal with customers who have exhibited a high anxiety level.” Training AI on transcripts of prior calls could help make bankers more knowledgeable in the future, he said.

Companies that decide to use AI have three choices, deMayo said: use something already available, such as ChatGPT; customize a model; or develop one in-house, an effort that could easily cost millions of dollars.

Concerns and Guardrails

He did caution that AI can make mistakes that seem accurate. “These models hallucinate. They do it in a very verbose way, a very articulate way, so it’s very hard to understand if it’s truthful or not,” deMayo said. “Because most of the foundational models were trained on public data there’s going to be some bias, there’s going to be some toxicity. Those are challenges.” Models are also frozen in time, he said: they cannot answer with knowledge from after the date their training data ends.

For its part, he said, Nvidia is building in guardrails for companies that want to use its resources to customize or develop an AI model. These include services to strip out extraneous information and remove bias and toxicity, deMayo said, as well as fact-checking against a knowledge database. “The guardrail is between the AI model and the person asking the question,” he said. “It essentially redirects down to a knowledge database to fact check, ‘Is this answer correct before we send it out?’ If it’s not, the guardrail prevents a response from going out.”
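The flow deMayo describes (the model drafts an answer, a guardrail checks that draft against a knowledge database, and the response is blocked if the check fails) can be sketched in a few lines of Python. This is a hypothetical illustration, not Nvidia’s implementation: the names `guarded_answer` and `GuardrailResult` are invented, the knowledge database is modeled as a plain dictionary, and the exact-match comparison stands in for whatever fact-checking method a real system would use.

```python
from dataclasses import dataclass
from typing import Callable, Mapping, Optional


@dataclass
class GuardrailResult:
    """Outcome of the (hypothetical) guardrail check."""
    allowed: bool             # True if the draft passed the fact check
    response: Optional[str]   # the answer sent to the user, or None if blocked


def guarded_answer(
    question: str,
    model: Callable[[str], str],
    knowledge_base: Mapping[str, str],
) -> GuardrailResult:
    """Sit between the model and the user; release an answer only if verified.

    Assumption: the knowledge base maps questions to reference answers, and
    "correct" means an exact match after trimming whitespace. Real systems
    would use retrieval and far more robust comparison.
    """
    draft = model(question)

    # Fact check: look up the reference answer in the knowledge database.
    reference = knowledge_base.get(question)

    if reference is not None and draft.strip() == reference.strip():
        return GuardrailResult(allowed=True, response=draft)

    # Unverifiable or contradicted: prevent the response from going out.
    return GuardrailResult(allowed=False, response=None)
```

For example, with a toy knowledge base `{"What is 2 + 2?": "4"}`, a model that answers `"4"` gets through, while one that answers `"5"` is blocked. Blocking anything the knowledge base cannot confirm is a deliberately conservative choice that matches the “prevents a response from going out” behavior described above, at the cost of refusing some correct answers.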
