Unmanned space missions can generate hundreds of terabytes of data every hour. What's a space agency to do?

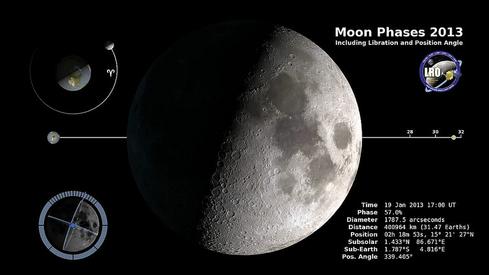

NASA's LADEE Moon Mission: 5 Goals

NASA has dozens of missions active at any given time: robotic spacecraft beaming high-resolution images and other data from great distances, and Earth-based projects surveying polar ice or examining global climate change. As you might imagine, the volume of data these efforts generate is staggering.

For Chris Mattmann, a principal investigator for the big-data initiative at NASA's Jet Propulsion Laboratory (JPL) in Pasadena, Calif., the term "terabytes of data" is hardly daunting.

"NASA in total is probably managing several hundred petabytes, approaching an exabyte, especially if you look across all of the domain sciences and disciplines, and planetary and space science," Mattmann told InformationWeek in a phone interview. "It's certainly not out of the realm of the ordinary nowadays for missions, individual projects, to collect hundreds of terabytes of information."

Not surprisingly, massive volumes of data bring formidable challenges, including the enduring big data question: What should we keep?

Not all bits need to be preserved for eternity, of course, and the trick is to determine which to save for the archives, and which to mine for insights but ultimately discard.

At NASA, the goal of some big data projects is to archive information, which means "keeping the bits around and doing data stewardship," says Mattmann.

Data from NASA's Earth Observing System (EOS) satellites and field measurement programs, for instance, is stored in the agency's Distributed Active Archive Center (DAAC) facilities, which process, archive, and distribute the information.

"Their responsibility… is to be the stewards and preservers of the information," says Mattmann. "It's a fairly large project, and their job is to ensure the bits are preserved, that they hang around."

Some big data projects, however, hinge more on analysis than stewardship. One radio astronomy example is the planned Square Kilometre Array (SKA), which will include thousands of telescopes in Australia and South Africa to explore early galaxy formation, the origins of the universe, and other "cosmic dawn" mysteries.

"In that particular case, there are a lot of active analytics and analysis problems that [researchers] are more interested in than necessarily keeping the data around," says Mattmann.

Another example is the US National Climate Assessment, a federal climate-change research project that Mattmann participates in. Its primary role is "to produce better measurements of snow-covered areas, and measurements of snow in areas where dust, black carbon, and other pollutants typically impact the way that satellites see snow," says Mattmann.

"That's an example, on the Earth side, of where it's mainly a big data analytics problem and not a preservation problem."

JPL's big data operations use a lot of open-source software, most notably Hadoop, a development that suits Mattmann and his team of 24 data scientists just fine.

Here's why: Since 2005, Mattmann has been a major contributor to big-data efforts at the Apache Software Foundation (ASF).

"I was one of the people who helped invent the Hadoop technology," said Mattmann, who was on the project management committee for a large-scale search engine "that Hadoop kind of got spun out of."

Today, Mattmann sits on the ASF's board of directors.

Open-source projects are "really useful in the context of government, and in terms of us wanting to save money," he said.

NASA, he pointed out, also makes good use of Apache Tika, an open-source tool for detecting and extracting metadata and structured text from documents, to decipher the 18,000 to 50,000 file formats available online.

"For us, file formats are where all the scientific observations, metadata, and information about the data are stored," said Mattmann. "We have to reach into files, crack them open, and pull this information out, because a lot of it feeds algorithms, analytics, and visualizations."
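Before a file can be "cracked open," a tool first has to work out what kind of file it is. One common way to do that is to compare the file's first few bytes against known signatures. The Python sketch below illustrates that idea only; it is not Tika's API or NASA's code. The signature table, the format labels, and the function names `detect_type` and `detect_file` are assumptions made up for this example, and a real detector would cover thousands of formats with additional heuristics.

```python
from typing import Optional

# Hypothetical, deliberately tiny signature table for illustration.
# Each entry pairs the leading bytes of a format with a human-readable label.
MAGIC_SIGNATURES = [
    (b"\x89HDF\r\n\x1a\n", "HDF5"),    # HDF5 superblock signature
    (b"CDF\x01", "NetCDF"),            # NetCDF classic format
    (b"CDF\x02", "NetCDF"),            # NetCDF 64-bit offset format
    (b"SIMPLE  =", "FITS"),            # first header card of a FITS file
    (b"\x89PNG\r\n\x1a\n", "PNG"),
    (b"%PDF-", "PDF"),
]


def detect_type(header: bytes) -> Optional[str]:
    """Return a format label if the header starts with a known signature."""
    for signature, label in MAGIC_SIGNATURES:
        if header.startswith(signature):
            return label
    return None  # unknown format: a real tool would fall back to other clues


def detect_file(path: str, probe_size: int = 16) -> Optional[str]:
    """Read only the first few bytes of a file and classify them."""
    with open(path, "rb") as handle:
        return detect_type(handle.read(probe_size))
```

Once the format is known, a format-specific parser can pull out the observations and metadata that feed the algorithms, analytics, and visualizations Mattmann describes.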
