If some forms of artificial intelligence may put lives at risk, what are some of the options for safer deployment of the technology?

Life is full of risks, some of them technological in nature.

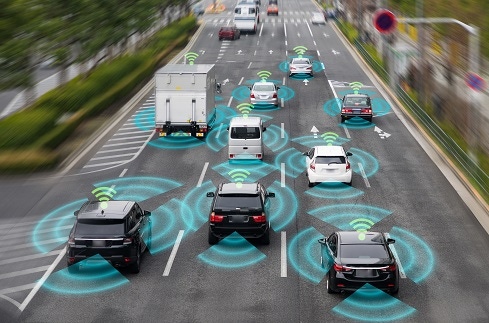

One can easily imagine how almost any technology, no matter how benign its intended function, could put people’s lives in danger. Nevertheless, these imaginative leaps shouldn’t keep society from continuing to roll out technological innovations. As artificial intelligence becomes the backbone of self-driving vehicles and other 21st century innovations, many people are holding their breath, just waiting for something to go dangerously wrong.

It’s not just the nervous nellies of this world who feel trepidation about AI. Even the technology’s experts seem to be engaging in a sort of AI death watch, as evidenced by sentiments such as “Who Do We Blame When an AI Finally Kills Somebody?,” as expressed in a recent blog by Bill Vorhies, editorial director of Data Science Central.

It would be foolish to overlook the many potentially lethal uses of AI, including but not limited to weapon systems. As AI invades every aspect of our lives, it’s not difficult to imagine how robotics innovations, such as those announced at the recent CES 2019, could have unintended consequences or be perverted to evil ends that put lives at risk and wreak havoc. For example:

An ambulatory device that has been trained to grapple anything might inadvertently choke someone.

A cargo-carrying robot that tags along a fixed distance behind its owner in public places might block other people’s exit paths in an emergency.

A self-driving vehicle might inadvertently take somebody into a disaster zone or unsafe neighborhood.

But is there anything qualitatively different about AI that makes it intrinsically more perilous than other technologies, past or present? After all, human inventions have been mortality risk factors since our ancestors began to attach sharpened stones to the ends of sticks. Were the original developers of these efficient piercing artifacts to be blamed for all subsequent casualties, down to the present day, that were caused by arrows, spears, and daggers?

People can indeed be held responsible for malicious and negligent uses of technology, and AI is no different in this regard. Many technologies incorporate safeguards that enable their users to prevent accidental injury. Medieval archers kept their idle arrows in quivers. Samurai swaggered with their swords securely sheathed. And firearms come equipped with safeties that keep them from firing in their holsters.

Before long, it will probably be standard for AI-equipped devices and apps to come with their own safeties, and for the legal system to require that these be ready and activated in most usage scenarios. That raises the question of how one would implement an effective “AI safety.”

When lives are at stake, we need to go well beyond the AI risk mitigation concerns that I discussed in this article and the techniques that I examined in this piece from last year. In the latter, I dissected the risks associated with AI behavior stemming from several sources, including rogue agency, operational instability, sensor blindspots, and adversarial vulnerability.

None of these risks, individually or collectively, necessarily constitutes a direct threat to life and limb, which means that eliminating them is no guarantee that the overall AI-driven system won’t run amok. Instead, avoidance of life-endangering outcomes would need to be addressed at a higher level in the process of modeling, training, and monitoring how the AI operates in controlled laboratory and simulated operating environments.

Currently, a fair amount of AI research and development focuses on techniques for spawning the algorithmic equivalent of “ethics.” That’s all well and good, but as I discussed here a few years ago, we’ll never know with mathematical certainty whether one or more algorithms in practice can always be relied upon to save a life or otherwise take the most ethical actions in all circumstances.

As we begin to consider the potential deployment of “ethical AI” techniques, we face intractable issues of a moral, legal, and practical nature. We can appreciate those by engaging in a “thought experiment” that consists of a cascade of thorny dilemmas:

What happens when diverse AI models in a distributed application (such as fleets of self-driving vehicles), each highly trained in its own ethical domain, interact in unforeseen ways and cause loss of life?

What do we do if any of these trained “ethical AI” models come into conflict in operations, requiring in-the-moment trade-offs that put lives at risk?

What if we build a higher-order “ethical AI” model to deal with these trade-offs, but it proves to be competent only in the limited range of conflicts for which it was trained?

Do we need to build and train an endless stack of higher-order “ethical AI” models to deal with all the potential life-endangering scenarios that may ever occur?

Where do we draw the line and declare that there are no remaining life-endangering scenarios that the current stack of “ethical AI” models would be unable to resolve?

Considering how rare many potential life-endangering scenarios might be in the historical record, how would we be able to use them as “ground truth” for training the “ethical AI” models that are competent to address them in the future?

In a “Catch-22” sense, having to wait until a sufficient number of life-endangering scenarios are present in historical data to sufficiently train “ethical AI” models may itself be unethical. Lives may be endangered until those future models are put into production. In many ways, this is an issue not so much with the inferencing behavior of the AI models themselves, but with the entire end-to-end process under which AI models are built, trained, served, monitored, and governed. In many life-threatening scenarios of the sort that I’ve sketched out, the entire AI DevOps organization may on some level be to blame for tragic results.

Reinforcement learning

Rather than sidetrack into the legal ramifications of that hard reality, I’ll point out something most of us already know: AI models are increasingly being trained to perform as well as or better than human beings in many tasks. In a practical sense, then, we might not need to instill “ethics” into the AI that drives our cars, for more or less the same reason we don’t require people to have some sort of life-affirming spiritual epiphany before receiving their driver’s license. If we can train AI to avoid engaging in life-endangering behaviors at least as well as normal humans would in the same circumstances, that might be sufficient for most practical purposes.

Reinforcement learning (RL) would seem to be the right approach for standing up AI with these capabilities. Using RL, the AI-guided device or app explores the full range of available actions that may or may not contribute to its achieving desired outcomes (including avoidance of scenarios that may endanger lives).

RL plays a growing role in many industries, often to drive autonomous robotics, computer vision, digital assistants, and natural language processing in edge applications. Staying with the autonomous vehicle example, one could train RL models on “ground truth” data gained from observing how well-trained, responsible humans operate motor vehicles. For modeling those real-world scenarios where such data is sparse, one might leverage equivalent data gathered from people in driving simulators. All of that data, in turn, could be used to build RL models that are trained to maximize a cumulative reward function associated with reinforcing life-protecting behaviors in a wide range of scenarios, including speculative and rare edge cases. By the same token, those models can also be trained to avoid life-endangering behaviors in practically any scenario seen in the historical record or amenable to simulation.
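To make the idea of a cumulative reward that reinforces life-protecting behavior concrete, here is a minimal sketch using tabular Q-learning, one of the simplest RL algorithms. Everything in it is an illustrative assumption rather than anything a real vehicle would use: the 3x4 grid, the two "hazard" cells standing in for life-endangering states, the reward values, and the function names `step`, `train`, and `greedy_path`. The point is only that a heavy penalty on hazardous states, combined with exploration, steers the learned policy onto a longer but safe route.

```python
import random

# Hypothetical toy world: a 3x4 grid. The shortest route from START to GOAL
# runs straight through two hazard cells; the safe route detours around them.
ROWS, COLS = 3, 4
START, GOAL = (2, 0), (2, 3)
HAZARDS = {(2, 1), (2, 2)}  # stand-ins for life-endangering states
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    row = min(max(state[0] + action[0], 0), ROWS - 1)
    col = min(max(state[1] + action[1], 0), COLS - 1)
    nxt = (row, col)
    if nxt in HAZARDS:
        return nxt, -100.0, True  # heavy penalty ends the episode
    if nxt == GOAL:
        return nxt, 10.0, True    # reward for arriving safely
    return nxt, -1.0, False       # small cost per step discourages dawdling


def train(episodes=5000, alpha=0.5, gamma=0.95, epsilon=0.3, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    n = len(ACTIONS)
    q = {((r, c), a): 0.0
         for r in range(ROWS) for c in range(COLS) for a in range(n)}
    for _ in range(episodes):
        state, done, steps = START, False, 0
        while not done and steps < 100:
            if rng.random() < epsilon:
                a = rng.randrange(n)  # explore a random action
            else:
                a = max(range(n), key=lambda i: q[(state, i)])  # exploit
            nxt, reward, done = step(state, ACTIONS[a])
            best_next = 0.0 if done else max(q[(nxt, i)] for i in range(n))
            # Standard Q-learning update toward reward plus discounted value.
            q[(state, a)] += alpha * (reward + gamma * best_next - q[(state, a)])
            state, steps = nxt, steps + 1
    return q


def greedy_path(q, max_steps=20):
    """Follow the learned policy from START with no exploration."""
    state, path = START, [START]
    for _ in range(max_steps):
        a = max(range(len(ACTIONS)), key=lambda i: q[(state, i)])
        state, _, done = step(state, ACTIONS[a])
        path.append(state)
        if done:
            break
    return path


if __name__ == "__main__":
    print(greedy_path(train()))
```

In this toy setup the trained agent takes the five-step detour through the middle row instead of the three-step route through the hazards. A production system would replace the lookup table with function approximation and the grid with recorded or simulated driving data, but the reward-shaping principle is the same.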

RL has matured over the past several years into a mainstream approach for building and training statistical models, even in operational circumstances where there is little opportunity to simulate operational scenarios before putting the AI into production. As I spelled it out here, it’s quite feasible to use RL to train robots to behave safely in many real-world scenarios. In fact, there is a rich research literature on using RL to tune the safety features (e.g., geospatial awareness, collision avoidance, defensive maneuvering, collaborative orchestration) of self-driving vehicles, drones, and robots.

If you’re a developer who’s doing RL training, this might be a long, frustrating, and tedious process. You may need to iterate and retrain the RL model countless times until it operates with sufficient reliability, accuracy, and efficiency in the life-or-death inferencing scenario for which it’s intended.
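One way to put a number on “sufficient reliability” is to ask how many incident-free test runs are needed before a given failure rate can be ruled out. The sketch below uses a standard binomial argument (often summarized as the “rule of three”: after n incident-free trials, the 95% upper bound on the incident rate is roughly 3/n). It assumes the trials are independent and representative of real operation, which is a strong assumption for simulated driving, and the function names are hypothetical.

```python
import math


def incident_rate_upper_bound(trials, confidence=0.95):
    """Upper confidence bound on the per-trial incident probability
    after `trials` consecutive incident-free runs.

    Solves (1 - p) ** trials = 1 - confidence for p, assuming
    independent, identically distributed trials.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    return 1.0 - (1.0 - confidence) ** (1.0 / trials)


def trials_needed(max_rate, confidence=0.95):
    """Smallest number of consecutive incident-free trials needed to
    claim the incident rate is below `max_rate` at the given confidence."""
    if not 0.0 < max_rate < 1.0:
        raise ValueError("max_rate must be between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - max_rate))


if __name__ == "__main__":
    n = trials_needed(0.001)
    print(n, incident_rate_upper_bound(n))
```

Under these assumptions, ruling out a one-in-a-thousand incident rate at 95% confidence takes roughly three thousand clean runs, and each tenfold tightening of the target multiplies that by about ten. That arithmetic is one reason the retraining and retesting loop can feel endless.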

Considering the stakes, this due diligence may be absolutely necessary and perhaps mandated. It’s not a responsibility that you, the AI developer, should take lightly.
