Negative feedback adds a brake to the irrational artificial intelligence (AI) exuberance.

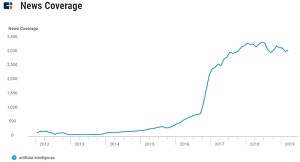

Every new technology goes through a hype cycle in which the news coverage is strongly positive at first, often gushing with the possibility for life-altering transformation. Even though AI is not new, dating back to the 1950s and having already experienced hype cycles, the cycle during 2016-2018 has been notable for the sheer volume of news coverage.

Though some luminaries wondered aloud over the last several years about the darker side of AI and put forward several dystopian scenarios, most of the news articles were about the amazing things that could be done with the technology. Media focused on how AI would transform nearly every industry and put a spotlight on the huge amounts of money being invested in novel startups aiming to lead the AI revolution. The AI Index 2018 Annual Report shows sentiment of AI news coverage being 1.5 times more positive during 2016-2018 than during the several preceding years.

Today it is broadly assumed that AI will have a transformative impact, even more so than the World Wide Web. The recently completed Edelman 2019 AI survey revealed that 91% of tech executives and 84% of the general public believe that AI constitutes the next technology revolution.

Negative feedback disrupts the hype cycle

But as happens with every cycle, the challenges associated with the adoption of the technology eventually emerge, and the news cycle begins to change to probe the problems. The tone of the coverage turns more worrisome, even dark, exploring potential pernicious applications and the negative consequences that could arise.

That’s certainly been true over the last several months, with recent reputational concerns about the creation of a “useless class,” about deepfakes that pose a very real threat to truth and trust, and about inherent biases in the algorithms. An additional concern is that many AI algorithms are “black boxes” whose operation and results cannot be readily explained. These are legitimate topics for exploration and serve to cool the hype. However, in some cases the concerns could be overdone or at least misplaced.

Most leading AI applications today are based on deep neural networks that require large data sets for training algorithms. Consequently, the AI is only as good as the data it is fed, and early development and deployment have exposed biases. As a specific artificial intelligence learns, it picks up the biases it finds in the data and can also reflect the inherent biases of those who build the algorithms. Thus, the technology can perpetuate or even amplify existing human foibles and beliefs, including racial, gender, and cultural stereotypes, in tools such as facial recognition, customer service, marketing, content moderation, and employment and loan decisions. A ProPublica story highlights this bias problem in software used to predict if someone convicted of a crime is likely to reoffend. The problem is not with the technology so much as it is with society, as highlighted in a recent Fortune article. The authors note that, though superficially objective, the algorithms inherently express societal norms and biases.

Finding balance in approaching AI

Getting rid of bias is difficult as it is innately ingrained in the data and human behavior. However, there may be a way to purge biases from algorithms (ironically) through another application of AI. The Wall Street Journal reported that fighting biases in artificial intelligence could get easier for businesses due to the recent availability of automated fairness tools that can check for bias creep in AI models. Armed with these tools, data scientists are then able to clean their data, amend their algorithms or provide an additional layer of human oversight to ensure fairness in the recommendations of AI applications.
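To make the idea of an automated fairness check concrete, here is a minimal sketch of one common measure: comparing how often each group receives a favorable decision. It is not the method of any specific tool mentioned in the reporting. The function names, the sample loan decisions, the group labels, and the 0.8 cutoff (a widely cited "four-fifths" rule of thumb) are all assumptions chosen for illustration.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the fraction of favorable decisions (1s) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += int(decision)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable decision
    return min(rates.values()) / highest


# Hypothetical model outputs: 1 = loan approved, 0 = denied.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

ratio = disparate_impact_ratio(decisions, groups)
flagged = ratio < 0.8  # assumed threshold for flagging the model for review
```

In this made-up sample, group "a" is approved three times out of four and group "b" once out of four, so the ratio falls well below the assumed cutoff and the model would be flagged for the kind of human review the paragraph above describes.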

Another area of concern is that people do not understand how AI algorithms work; their operations are not readily explainable, especially in the case of deep neural networks. An MIT Technology Review story notes that “by its nature, deep learning is a particularly dark black box,” with the functions of its algorithms opaque even to those who create them. As these algorithms grow in capability, the decisions they influence become increasingly opaque and thus less accountable. Articles in Science and The Guardian go so far as to describe AI as alchemy or an exercise in magical thinking.

This has led to calls for businesses to be as transparent as possible in explaining the operation of their algorithms. IBM has suggested creating a “Supplier’s Declaration of Conformity” that would be completed and published by companies that develop and provide AI, to address both bias and opaqueness. The document would show “how an AI service was created, tested, trained, deployed, and evaluated; how it should operate; and how it should (and should not) be used.”

While most surely agree that bias is a problem, not all are convinced that the black-box nature of complex algorithms is equally troublesome. Wired co-founder Kevin Kelly has argued that technology, and by extension AI, is a projection of the human mind. The mind serves as the basis for modeling neural networks. That we cannot completely understand how a multi-layer neural network works is perhaps not surprising as we humans cannot easily explain our own thought processes, either. But we don’t automatically reject the conclusions we derive or really question the leaps of logic and intuition that sometimes lead to breakthroughs. Instead we refer to these sudden phenomena as unexpected illumination, enlightenment, insight and genius.

This is similar to an argument made recently by Carnegie Mellon Professor Elizabeth Holm, as reported by Singularity Hub. She asked whether AI black boxes are all bad and concluded that surely they are not, because human thought processes are black boxes too. As reported, her view is that we often rely on human thought processes that the thinker can’t necessarily explain. Holm added that asking people to explain their rationale has not eliminated bias, stereotyping, or bad decision-making.

Worries about bias and black box algorithms are entirely legitimate, even if they are sometimes overblown or the underlying problems can be corrected. Such scrutiny serves to correct the hype and helps to focus the industry conversation on issues of real import. After all, hype is akin to irrational exuberance, reflecting a system that is out of balance with respect to objective reality. This condition is inherently unsustainable and, from a systems theory perspective, negative feedback eventually corrects the overreach and restores equilibrium; that feedback is usually subtle in the beginning but becomes more pronounced if the system does not reset to normal limits. The very discussion around bias and black box algorithms has prompted deeper thought and solutions.

Given the potential transformational impacts from AI that arguably have deeper implications for humanity than any technology to date, this disruption of the hype serves as a form of pumping the brakes, hopefully keeping us from careening out of control towards an uncertain future.


Gary Grossman is Senior Vice President and Technology Practice Lead, Edelman AI Center of Expertise.
