Can the trendy tech strategy of DevOps really bring together developers and IT operations to deliver better apps faster? Our survey shows a mixed bag of results.

Download the entire Jan. 6, 2014, issue of InformationWeek, distributed in an all-digital format (registration required).

IT leaders need to stop lying to themselves. Sure, agile development and virtualized datacenters help them deliver better results. But have technology organizations really made the leaps necessary to improve IT reliability and, even more important, IT's ability to pounce when the business sees opportunity? Too often, IT organizations struggle to keep up with never-ending changes to their tech environments, especially when installing and upgrading applications. On one side, the IT operations zealots want to keep the environments as stable as possible, because they're judged on uptime and cost. On the other side, the crazy developers want to constantly change or add apps, because they're praised for pushing new features to customers, partners, and employees.

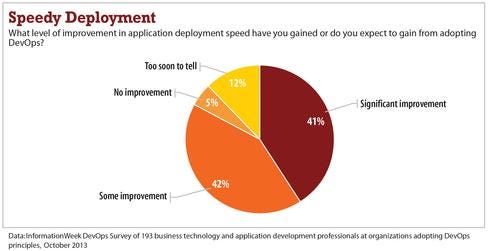

The desire to resolve this tension explains the growing love fest for DevOps, an IT methodology that promises improved reliability and efficiency, lower costs, faster response times, and better communication among teams. Twitter, Netflix, and Facebook say they wouldn't be able to implement their tech strategies without DevOps. Among companies adopting DevOps approaches in our InformationWeek DevOps Survey, eight of 10 say they've realized or expect to see at least some improvement in app deployment speed and infrastructure stability as a result.

Think of DevOps as agile software development for the entire IT life cycle. Teams not only write code in short iterations, but they also test and even implement it in similar bursts, using version-control tools and more highly automated datacenter configurations. DevOps is meant to blow away the mentality of developers writing code and then throwing it over the wall to the datacenter team to figure out how to efficiently run it. In that way, DevOps has its roots in the lean manufacturing world, which fights the problem of engineers designing gear that the factories can't afford to build.

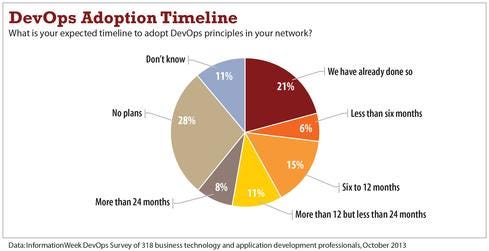

What's not to love, right? But while 75% of tech pros know about DevOps, according to our survey, only 21% of those familiar with it are using it, though another 21% say they expect their organizations to adopt DevOps principles within a year. Those organizations with no DevOps plans say other priorities take precedence, there's no demand for what DevOps promises, or they lack the resources or expertise to implement it. One in five blames confusion around the DevOps concept. "The term sounds like a job role and not a process, so many manager/executive-level people don't want to hear about it," one tech pro said. One-fourth cite lack of cooperation by IT operations, developers, or both.
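The percentages in this paragraph use different bases: 75% is a share of all respondents, while the two 21% figures are shares of only those familiar with DevOps. A quick conversion, assuming both figures come from the same respondent pool as the text implies, puts current users at roughly 16% of all respondents.

```python
# Figures from the survey paragraph above, expressed as fractions.
AWARE = 0.75              # share of all respondents who know about DevOps
USING_IF_AWARE = 0.21     # share of the aware group already using it
PLANNING_IF_AWARE = 0.21  # share of the aware group expecting to adopt within a year


def share_of_all(share_of_aware: float, aware: float = AWARE) -> float:
    """Rescale a share of the 'aware' group to a share of all respondents."""
    return share_of_aware * aware


using_overall = share_of_all(USING_IF_AWARE)
planning_overall = share_of_all(PLANNING_IF_AWARE)

print(f"Using DevOps today:     {using_overall:.0%} of all respondents")
print(f"Adopting within a year: {planning_overall:.0%} of all respondents")
```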

Figure 2:

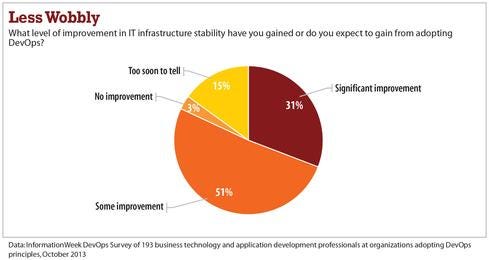

Other survey respondents just aren't wild about the results from DevOps. "DevOps is a mixed bag," one respondent said. "We have issues with quality control." While 31% have realized or expect significant improvement, 51% report only "some improvement." That leaves a mere 3% who have seen or expect no improvement, plus 15% who think it's too soon to tell.
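These four figures account for every adopter, and they reconcile with the earlier "eight of 10" claim: significant plus some improvement gives 82%. The same arithmetic shows that most of those seeing improvement are seeing only a modest one.

```python
# Adopters' reported results or expectations, in percent (from the text).
outcomes = {
    "significant improvement": 31,
    "some improvement": 51,
    "no improvement": 3,
    "too soon to tell": 15,
}

total = sum(outcomes.values())
at_least_some = outcomes["significant improvement"] + outcomes["some improvement"]
some_only_share = outcomes["some improvement"] / at_least_some

print(f"Categories sum to {total}%")                              # 100%
print(f"At least some improvement: {at_least_some}%")             # 82%
print(f"Improvers reporting only 'some': {some_only_share:.0%}")  # 62%
```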

In concept, DevOps is hard to argue against -- who's against cooperation, speed, and stability? The goals most often cited in our survey are to deliver application updates faster with less downtime, improve IT's ability to track and respond to changes in the infrastructure, and get more visibility into network and application performance. But as the performance numbers in our survey suggest, getting blockbuster results from DevOps might be more difficult than it sounds. That's because DevOps is a methodology, not just a technical tool that IT implements. It takes significant cultural changes along with adoption of technical elements such as automated datacenter configuration.

The role of automation

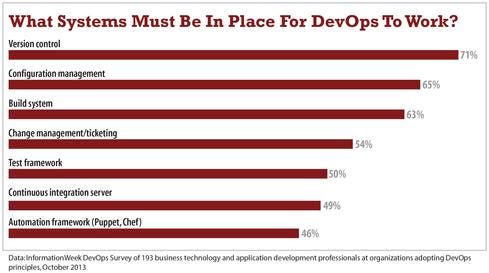

The critical technical foundations of DevOps include software version control, automated configuration management of IT infrastructure, automated virtual machine deployments, and network management. Our survey finds the three most-cited tools used in DevOps are custom scripts (52%), Puppet (29%), and Chef (20%). Puppet and Chef are tools that provide automated configuration management. As an example, let's look at how an application deployment to a virtualization cluster or even a cloud provider such as GoGrid or Amazon Web Services happens now and how it looks different under DevOps.

Without DevOps, an IT operations team meets with the development team to find out how much storage it needs for a new app, which components the application uses (Apache this, Java that), which version of each component developers are using, and how the components communicate with one another. Being good engineers, the IT ops pros draw the setup in Visio, everyone reviews and agrees, and ops manually configures each system according to that agreed-upon architecture.

Figure 3:

Too often, once the enterprise rolls out the new app, it fails because of an error that developers say they never saw in testing, and the ops pros throw up their hands, saying they followed the agreed-upon architecture exactly. The developers go back and figure out what went wrong, and once the problem is fixed, everyone is told not to touch anything. Six months later, this process happens again when developers release an updated version.

Under DevOps, automation tools such as Puppet and Chef help alleviate this problem by requiring developers themselves to describe the infrastructure configuration and letting the tools automatically deploy the servers, set up Apache or Java with the proper versions, and configure firewall ports. Because developers test the application during code iterations on the company's agreed-upon architecture, the move into production is usually much smoother.
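Puppet and Chef each have their own configuration languages, and the sketch below is not their syntax. It's a minimal Python illustration, under simplifying assumptions, of the desired-state idea the paragraph describes: developers declare what a server should look like, and an idempotent `converge` step (a hypothetical name) makes the real server match. `ServerState`, the package names, versions, and ports are all invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ServerState:
    """Simplified, hypothetical model of one server's software and firewall."""
    packages: dict[str, str] = field(default_factory=dict)  # name -> version
    open_ports: set[int] = field(default_factory=set)


# Desired state written by developers and reviewed with ops (hypothetical values).
DESIRED = ServerState(
    packages={"apache-httpd": "2.4", "java": "1.7"},
    open_ports={80, 443},
)


def converge(actual: ServerState, desired: ServerState) -> list[str]:
    """Bring `actual` in line with `desired` and return the actions taken.

    Packages not listed in `desired` are left untouched. A second run takes
    no actions, which is what lets the same description be applied in the
    lab and in production with the same result.
    """
    actions = []
    for name, version in desired.packages.items():
        if actual.packages.get(name) != version:
            actual.packages[name] = version
            actions.append(f"install {name} {version}")
    for port in sorted(desired.open_ports - actual.open_ports):
        actual.open_ports.add(port)
        actions.append(f"open port {port}")
    for port in sorted(actual.open_ports - desired.open_ports):
        actual.open_ports.discard(port)
        actions.append(f"close port {port}")
    return actions


server = ServerState(packages={"java": "1.6"}, open_ports={80, 8080})
first_run = converge(server, DESIRED)
second_run = converge(server, DESIRED)
print(first_run)
print(second_run)  # [] -- nothing left to change
```

The key design choice is idempotence: because `converge` only acts on differences, running it repeatedly is safe, and the declared state doubles as documentation of how the server is supposed to look.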

Let's keep the big picture in mind, though. Although these tools are important, they're a means to an end -- an IT architecture that puts apps in end users' hands -- and it's the end architecture that must be defined, discussed, iterated on, designed, and then implemented within the tool. As one respondent to our survey said: "The key point to remember is DevOps is much more about culture than it is around tooling. ... Both DevOps and Agile borrow key concepts from lean manufacturing, so it's all about communication and openness."

The DevOps methodology forces the various IT teams into a more mature process that relies less on individual team members to drive results and instead requires trust in repeatable, reliable processes for tasks such as virtual machine configuration.

Back to our example of server configuration: Under DevOps, IT wouldn't rely on a single engineer who "knows how it was set up before." Instead, IT uses a configuration that's documented, easily repeatable by developers running the tools in a lab, and, most important, validated by both development and IT ops. If the DevOps process works, there should be less finger-pointing, less reliance on tribal knowledge of how all the knobs and buttons must be set in the infrastructure, and more focus by team members on the end goal. That end goal is the IT architecture, and ultimately the experience that employees or customers get from the apps.

Architecture before automation

DevOps uses a circular methodology, whereby the output of the last iteration of the IT environment feeds the inputs of the next iteration. For example, when IT installs an application, the environment must open ports through the firewall. But say the app doesn't work because developers forgot to mention that it needs to talk to Active Directory, so the ports it uses weren't on the list of ports they asked to have opened. The iteration to fix that problem should shift more time toward architecture and modeling of the processes and infrastructure so developers don't make that mistake again. Chef and Puppet provide configuration management for a variety of packaged and custom software, including operating systems, applications, and databases.
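One way to make the Active Directory mistake harder to repeat, sketched here as an assumption rather than a feature of Chef or Puppet, is to derive firewall requests from the architecture model's list of dependencies instead of typing port lists by hand. `SERVICE_PORTS`, `required_ports`, and `missing_rules` are hypothetical names. The Active Directory entry lists only a few well-known TCP ports (Kerberos 88, LDAP 389, LDAPS 636, Global Catalog 3268) and is not a complete rule set.

```python
# Hypothetical architecture model: services an app may depend on and the TCP
# ports each needs. Values are common defaults, not a complete list.
SERVICE_PORTS: dict[str, set[int]] = {
    "https": {443},
    "sql-server": {1433},
    "active-directory": {88, 389, 636, 3268},  # Kerberos, LDAP, LDAPS, Global Catalog
}


def required_ports(dependencies: list[str]) -> set[int]:
    """Union of the ports needed by every dependency declared in the model."""
    ports: set[int] = set()
    for dep in dependencies:
        ports |= SERVICE_PORTS[dep]  # KeyError means the dependency isn't modeled yet
    return ports


def missing_rules(dependencies: list[str], requested_ports: list[int]) -> list[int]:
    """Ports the architecture needs that the firewall request doesn't open."""
    return sorted(required_ports(dependencies) - set(requested_ports))


# Firewall request written by hand before the AD dependency was modeled.
requested = [443, 1433]
app_dependencies = ["https", "sql-server", "active-directory"]
print(missing_rules(app_dependencies, requested))  # [88, 389, 636, 3268]
```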

The real goal of these DevOps tools isn't to get the configuration files for these applications syntactically perfect. It's to serve the larger goals, such as keeping an e-commerce system running. Doing so requires managing the entire infrastructure that the configuration files create, and checking that the infrastructure they build matches the designed architecture.

Figure 4:

For example, the architecture might describe how the environment should react to keep a system up when the database server fails because of a lost network connection, a failed drive, or even too many users accessing it. Yet when someone installs the configuration, the application uses the IP address of the database server instead of the virtual IP of the database cluster, so losing the primary server breaks the connection from app to database. If the configuration had matched the architecture, the failover would have occurred properly.
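Checking a configuration against the architecture can be as simple as comparing the endpoint the app uses with the endpoint the design calls for. The sketch below assumes a hypothetical `ARCHITECTURE` record and a `db_host` setting in the app's config; the addresses come from the 192.0.2.0/24 documentation range. It flags exactly the mistake described above: pointing the app at one cluster node instead of the cluster's virtual IP.

```python
# Hypothetical architecture record for the database tier. Addresses come from
# the 192.0.2.0/24 documentation range.
ARCHITECTURE = {
    "db_cluster_vip": "192.0.2.10",
    "db_nodes": {"192.0.2.11", "192.0.2.12"},
}


def check_db_endpoint(app_config: dict, arch: dict) -> list[str]:
    """Return problems with how the app's config reaches the database tier."""
    host = app_config.get("db_host")
    if host == arch["db_cluster_vip"]:
        return []  # matches the architecture, so failover stays transparent
    if host in arch["db_nodes"]:
        return [
            f"db_host {host} is a single cluster node; losing it breaks the "
            f"app-to-database connection. Use the cluster VIP {arch['db_cluster_vip']}."
        ]
    return [f"db_host {host!r} is not part of the documented database tier."]


print(check_db_endpoint({"db_host": "192.0.2.10"}, ARCHITECTURE))  # []
print(check_db_endpoint({"db_host": "192.0.2.11"}, ARCHITECTURE))
```

Run as part of every deployment, a check like this catches the mismatch before the primary server fails rather than after.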

IT teams set up DevOps tools to respond to bad events in the infrastructure, such as outages from broken servers, but also to good events, such as increased demand for an e-commerce application. In either case, IT can automate creation of new environments -- such as spinning up duplicate virtual machines to handle overcapacity -- to immediately fix these common problems.
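A single rule can cover both kinds of events the paragraph mentions. The sketch below is a minimal illustration, not any particular tool's autoscaling feature: `instances_to_launch` is a hypothetical helper, and the redundancy floor of two VMs and the capacity of 500 requests per second per VM are assumed values.

```python
import math


def instances_to_launch(healthy: int, requests_per_sec: float,
                        capacity_per_instance: float, minimum: int = 2) -> int:
    """Number of new VMs to spin up for the current situation.

    The target is the larger of a redundancy floor (`minimum`) and the count
    needed for current load, so the same rule covers a bad event (servers
    lost, `healthy` falls below the floor) and a good event (demand rises).
    Scaling back down is left out of this sketch.
    """
    needed_for_load = math.ceil(requests_per_sec / capacity_per_instance)
    target = max(minimum, needed_for_load)
    return max(0, target - healthy)


# Hypothetical capacity: each VM handles 500 requests/sec.
print(instances_to_launch(healthy=1, requests_per_sec=100, capacity_per_instance=500))   # 1: server failed
print(instances_to_launch(healthy=2, requests_per_sec=2600, capacity_per_instance=500))  # 4: demand spike
print(instances_to_launch(healthy=3, requests_per_sec=900, capacity_per_instance=500))   # 0: no action
```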

Of course, there's a catch: The very tools that automate the infrastructure, such as Puppet, Chef, or custom scripts, can drag it down if they fail. One bad script could bring down your infrastructure, just as one bad patch could before DevOps. An essential part of the IT architect's work is knowing the details of how the infrastructure's automation works in order to determine the impact an application change will have.
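One common safeguard, not described in the text and assumed here, is to preview what an automation run would change before letting it touch production, in the spirit of Puppet's noop mode or Chef's why-run mode. `plan_changes` is a hypothetical helper over flat key/value settings, not either tool's API; its output is the kind of change list an architect could review to judge impact.

```python
def plan_changes(current: dict[str, str], desired: dict[str, str]) -> list[str]:
    """Describe what an automation run would change, without changing anything."""
    changes = []
    for key in sorted(current.keys() | desired.keys()):
        before, after = current.get(key), desired.get(key)
        if before == after:
            continue  # already in the desired state
        if before is None:
            changes.append(f"add {key} = {after}")
        elif after is None:
            changes.append(f"remove {key} (was {before})")
        else:
            changes.append(f"change {key}: {before} -> {after}")
    return changes


# Hypothetical app settings before and after a proposed script change.
current = {"db_host": "192.0.2.10", "max_connections": "100", "tls": "on"}
proposed = {"db_host": "192.0.2.11", "max_connections": "100", "log_level": "debug"}
for line in plan_changes(current, proposed):
    print(line)
```

Here the preview surfaces two risky edits (moving `db_host` and dropping `tls`) before they are applied, which is exactly where an architect's knowledge of the environment pays off.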

Not everyone is on board with DevOps. In our firm's consulting work, the arguments we hear most often against DevOps are that it dumps more responsibility onto developers, that developers should focus on coding and let the IT ops people deal with servers, and that having standard and staging servers offers enough reliability.

The doubts come from both sides: 23% of respondents adopting DevOps say developers are the ones resisting the methodology, 38% blame network/datacenter folks, and another 39% blame both. Certain IT pros also have a legitimate fear of losing their jobs: Engineers who can't understand the architecture and all the infrastructure components, and then write scripts to automate the DevOps processes, won't fit in a DevOps environment. Although 59% of the respondents to our survey say they have the proper scripting expertise on their network and systems teams to implement DevOps, 38% of those are just now developing the skill set.

Everyone on board: The security example

The DevOps name implies that only developers and IT operations can benefit from the methodology. Not true. We've seen how IT security, audit, and governance teams can work more effectively and respond to business change better using DevOps. Sadly, many people don't see things that way: Only 45% of tech pros adopting DevOps say they expect it to improve security; 32% see DevOps having no impact, good or bad, on security; and 7% think DevOps will make the IT operation somewhat less secure.

To sell the security team on DevOps, let them sit in on the deep technical discussions that team members must have to develop the associated architecture models. Those discussions are pure gold for spotting security risks.

Figure 5:

For example, one large company was spinning up a DevOps-driven development project using a private-cloud provider, and it added a security engineer to the discussion. That engineer was shocked to learn that all the scripts the team planned to use for automated provisioning and network changes stored user names and passwords in the scripts and used domain-administrator-level accounts. That security flaw meant anyone who had access to the scripts could log in to the network and gain full access to all the servers and data. Was this just how DevOps had to be done? After poking around, the worried engineer learned that the company was storing domain-level accounts in all kinds of batch files, scripts, and application configuration files -- all in plain text accessible to pretty much all Active Directory users. He started a company-wide fix right away.
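An audit like the one the security engineer started can begin with a crude scan for credentials sitting in plain text. The sketch below is a heuristic under stated assumptions: the two regular expressions, the file suffixes, and the helper names `scan_text` and `scan_tree` are all illustrative, and real secret scanners use far more rules. The sample batch file is invented.

```python
import re
from pathlib import Path

# Illustrative detection rules; real scanners use many more.
SECRET_RULES = [
    ("hard-coded password", re.compile(r"(?i)\b(?:password|passwd|pwd)\s*[=:]\s*\S+")),
    ("domain administrator account", re.compile(r"(?i)\b\w+\\administrator\b")),
]
SCANNED_SUFFIXES = {".bat", ".cmd", ".ps1", ".sh", ".config", ".ini", ".xml"}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line number, finding) pairs for one file's contents."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for label, pattern in SECRET_RULES:
            if pattern.search(line):
                findings.append((line_no, label))
    return findings


def scan_tree(root: str) -> dict[str, list[tuple[int, str]]]:
    """Scan every script or config file under `root` and collect findings."""
    results = {}
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix.lower() in SCANNED_SUFFIXES:
            hits = scan_text(path.read_text(errors="ignore"))
            if hits:
                results[str(path)] = hits
    return results


sample_batch_file = (
    "@echo off\n"
    "set SVC_USER=CORP\\Administrator\n"
    "set PASSWORD=Winter2013!\n"
    "call provision.bat %SVC_USER% %PASSWORD%\n"
)
print(scan_text(sample_batch_file))
```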

Let's face it, both security and operations teams generally have a "say no" culture. Getting those teams in the same room implementing the DevOps methodology will make deployments go more smoothly, because both teams can gain trust in the automated processes and tools. Working together, they can automate even complex tasks, such as separating data access by role, which also makes them easier to audit.

Nick Galbreath, former director of engineering at Etsy, a website for selling handmade crafts, says Etsy used the same configuration scripts that automate deployment of computing capacity to also assert security controls, such as requiring SSL or checking whether certain ports are open. Misconfigurations get reported automatically. Taken a step further, when a system is configured using a tool such as Puppet, the system could require an automatic vulnerability scan before putting the new apps on the production network.
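The sketch below is not Etsy's code; it's a minimal Python illustration of configuration that also asserts security controls, assuming a hypothetical `HostConfig` record and a policy that only HTTPS (port 443) may be open. `ready_for_production` shows the further step the paragraph suggests: a gate that also requires a passing vulnerability scan, whose result is assumed to come from whatever scanner you already use.

```python
from dataclasses import dataclass, field

ALLOWED_PORTS = {443}  # hypothetical policy: HTTPS only


@dataclass
class HostConfig:
    """Hypothetical summary of one host's configured security settings."""
    name: str
    ssl_required: bool
    open_ports: set[int] = field(default_factory=set)


def check_security(host: HostConfig) -> list[str]:
    """Report settings that violate the security policy."""
    problems = []
    if not host.ssl_required:
        problems.append(f"{host.name}: SSL not required")
    unexpected = sorted(host.open_ports - ALLOWED_PORTS)
    if unexpected:
        problems.append(f"{host.name}: unexpected open ports {unexpected}")
    return problems


def ready_for_production(host: HostConfig, vuln_scan_passed: bool) -> bool:
    """Gate: policy checks must pass and the external vulnerability scan must be clean."""
    return vuln_scan_passed and not check_security(host)


web01 = HostConfig("web01", ssl_required=True, open_ports={443})
web02 = HostConfig("web02", ssl_required=False, open_ports={80, 443, 8080})
for host in (web01, web02):
    print(host.name, check_security(host) or "OK")
```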

You aren't Etsy or Facebook

Web companies such as Facebook, Netflix, and Etsy are big public advocates of DevOps. Etsy's technical blogs do a great job of describing how DevOps tools can work at massive scale.

But you're not Etsy, so you can't just copy its model. You need to start at the architecture stage and model for your very different business needs, and you need to accept that the necessary cultural change will take time.

Web companies make a huge number of code changes but have very few applications -- the opposite of most enterprise IT organizations, which have a maze of legacy apps for which they try to minimize changes. In a single month (January 2011), for example, Etsy made 517 code deployments to a Web application production environment that served 1 billion page views. Compare that with the organizations represented in our survey: 96% typically move fewer than 60 application deployments into production in an entire year. Conversely, Etsy has essentially one major application, while 23% of the organizations represented in our survey have more than 60 applications.
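The raw counts are easier to compare on a common scale. Using only the figures in the paragraph, and averaging Etsy's January across all 31 days, the arithmetic below gives about 17 deployments a day, and shows a single Etsy month exceeding a typical respondent's entire year by more than eight times.

```python
ETSY_DEPLOYS_JAN_2011 = 517  # from the text
DAYS_IN_JANUARY = 31
SURVEY_YEARLY_CEILING = 60   # 96% of respondents deploy fewer than this per year

per_day = ETSY_DEPLOYS_JAN_2011 / DAYS_IN_JANUARY
month_vs_year = ETSY_DEPLOYS_JAN_2011 / SURVEY_YEARLY_CEILING

print(f"Etsy, January 2011: {per_day:.1f} deployments per day on average")
print(f"One Etsy month vs. a typical respondent's year: more than {month_vs_year:.1f}x")
```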

You probably have systems from the 1980s and software documentation that might as well say "Here Be Dragons," because no one really knows how those systems work and the mindset is simply "don't touch that code." Startup Web companies have the luxury of being able to set up their teams from the start for the integration of IT ops and development -- the entire IT life cycle -- to emphasize open communication, continuous development, and shared architecture knowledge.

Given these vast differences, why should traditional IT shops try to mimic the DevOps agility and automation of the Web giants? The reason is that both Web companies and traditional enterprises share a need for speed and efficiency. To those 46% of survey respondents who say they have more pressing technology or business priorities, or the 33% who see no business demand for DevOps, we say hogwash. Companies of all kinds want to give customers and employees new capabilities faster, and few companies have a culture whereby IT can respond reliably and quickly enough to business changes.

Agile development has solved only half the problem. DevOps is a powerful methodology to get all of IT to ditch broken processes and systems that slow down innovation and to spend more time on opportunities.

Michael A. Davis is CTO of CounterTack.
