What Just Broke?: Should AI Come with Warning Labels?

AI still has a lot to learn, but does the zeal to explore its possibilities overshadow its encoded flaws to the detriment of an unaware public?

Of late I have sat in on some conversations among people who are obviously much smarter than I am, and who enjoy much higher pay grades, as they discussed how AI, generative AI in particular, might transcend and transform what we do.

While I cannot name-drop or repeat their perspectives, I will speak to some ongoing concerns I have about how such discussions treat AI.

What I do not hear enough about are the built-in shortcomings of AI, and I do not just mean breaking points where algorithms go belly-up. I am talking about flaws in how AI might be trained up in the first place, the bias that gets baked in from the start: problems that are features rather than bugs in the system.

Oftentimes there is some general acknowledgement that AI is still in development and has much more to learn before it can take over the world. Missing from that acknowledgement is a detailed breakdown of how AI might function exactly as coded yet still produce flawed or tainted results by design.

Listen to the full episode of "What Just Broke" for more:

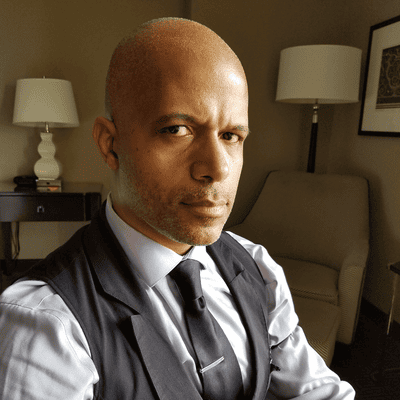
