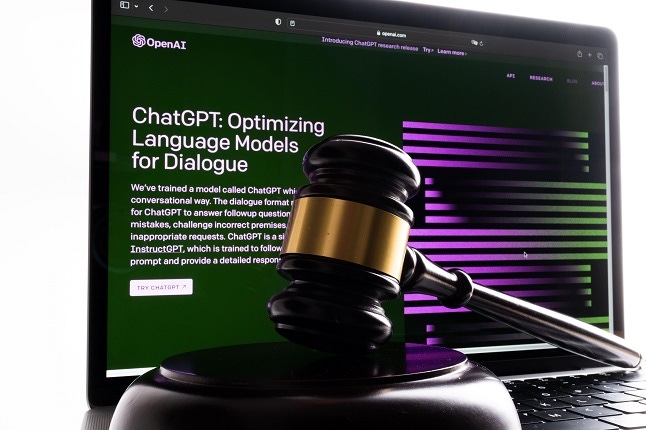

OpenAI’s ChatGPT Generates Lawsuits Over Data Use

A new class-action lawsuit alleges the generative AI software stole large amounts of personal data for training without authorization, while another suit says ChatGPT uses copyrighted works without authorization.

In just several months, artificial intelligence generative-text software ChatGPT has jump-started a sagging tech industry and spawned billions of dollars in AI investments around the world.

But a pair of lawsuits filed Wednesday -- one claiming OpenAI (the maker of ChatGPT) secretly used stolen data from unsuspecting users, and another accusing the company of stealing from copyrighted books -- are echoing calls for more regulation of the rapidly evolving technology.

The first lawsuit blames OpenAI’s alleged data misuse on the 2019 restructuring that opened the technology to for-profit ventures. “As a result of the restructuring, OpenAI abandoned its original goals and principles, electing instead to pursue profit at the expense of privacy, security, and ethics. It doubled down on a strategy to secretly harvest massive amounts of personal data from the internet, including private information and private conversations, medical data, information about children, essentially every piece of data exchanged on the internet it could take -- without notice to the owners or users of such data, much less with anyone’s permission,” the lawsuit says.

A Temporary Freeze for ChatGPT?

The first suit seeks to force OpenAI to abandon use of unauthorized private information and create safeguards and regulations for its products and users -- and calls for a temporary freeze on commercial use and development of OpenAI’s products until the company has implemented more regulations.

Bob O’Donnell, president, founder, and chief analyst at TECHnalysis Research, was not surprised at news of the lawsuits. “I think it’s going to be very challenging to stop or pause progress already made here,” he says.

However, he adds, “most companies have not been transparent about what data they did or didn’t take. Even if it’s a bunch of lawyers trying to make money off the incredible success of ChatGPT, it does raise legitimate questions about data sources.”

The suit uses examples of many anonymous plaintiffs who allege private information was used for commercial purposes without consent. One plaintiff, a software engineer, is concerned that ChatGPT has “taken his skills and expertise, as reflected in his online contributions, and incorporated them into products that could someday result in professional obsolescence for software engineers like him,” the lawsuit says.

The 157-page lawsuit is filled with such examples of people claiming OpenAI’s products stole information without their consent. The lawsuit starts off with a scathing assessment of OpenAI’s conduct as ChatGPT’s use has skyrocketed since its November 2022 public release. “Defendants’ disregard for privacy laws is matched only by their disregard for the potentially catastrophic risk to humanity,” the lawsuit says. “Despite established protocols for the purchase and use of personal information, defendants took a different approach: theft.”

The complaint says it’s not just ChatGPT users falling victim to unauthorized use, but anyone who uses applications with ChatGPT integrations, like Snapchat, Stripe, Spotify, Microsoft Teams, and Slack.

Meanwhile, various companies, including IBM, Microsoft (which is a major backer of OpenAI), Accenture, and many other tech players, are launching multi-billion-dollar efforts to bolster their generative AI capabilities. “Powerful companies, armed with unparalleled and highly concentrated technological capabilities, have recklessly raced to release AI technology with disregard for the catastrophic risk to humanity in the name of ‘technological advancement,’” the lawsuit says.

ChatGPT Lawsuits Piling Up

Also on Wednesday, another class-action lawsuit claimed ChatGPT’s machine learning was trained on books without permission from their authors. The complaint was filed in a San Francisco federal court and alleged ChatGPT’s machine learning training dataset came from books and other texts that are “copied by OpenAI without consent, without credit, and without compensation.”

Daniel Newman, chief analyst at Futurum Research, says data protection remains a critical concern with AI applications. “We have definitely hit the inflection point where the speed of rolling out generative AI and the potential implications around data rights and privacy are coming to a head,” Newman tells InformationWeek. “While I believe the critical importance of AI will win out in the long run, the rapid deployment has created vulnerabilities in general of how data is ingested and then used. It will be critical that the tech industry takes this issue seriously…”

InformationWeek has reached out to OpenAI for comment and will update with any response.
